Installing and Deploying Kubernetes v1.11

Introduction to Kubernetes

1. Background

Cloud computing is growing rapidly:

- IaaS

- PaaS

- SaaS

Docker technology is advancing quickly:

- Build once, run anywhere

- Fast, lightweight containers

- A complete ecosystem

2. What is Kubernetes

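First, Kubernetes is a new, leading solution for distributed architectures built on container technology. Kubernetes (k8s) is Google's open-source container cluster management system (its internal counterpart at Google is Borg). Building on Docker, it gives containerized applications a complete set of capabilities, including deployment and execution, resource scheduling, service discovery, and dynamic scaling, which makes large container clusters much easier to manage.

Kubernetes is a complete platform for running distributed systems. It offers full cluster management, multi-layered security and admission control, multi-tenant application support, transparent service registration and discovery, a built-in intelligent load balancer, strong fault detection and self-healing, rolling upgrades and online scaling of services, an extensible automatic resource scheduler, and fine-grained resource quota management. Kubernetes also ships with comprehensive management tooling that covers development, deployment and testing, and operations monitoring.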

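In Kubernetes, the Service is the core of the distributed cluster architecture. A Service object has the following key characteristics:

- It has a unique, explicitly assigned name

- It has a virtual IP (Cluster IP, Service IP, or VIP) and a port number

- It provides some kind of remote service capability

- It is mapped to the group of container applications that provide that capability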

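The processes behind a Service currently all expose their functionality over sockets, for example Redis, Memcache, MySQL, a web server, or a dedicated TCP server process that implements some specific business function. A Service is usually backed by several related processes, each with its own Endpoint (IP + port), yet Kubernetes lets us reach them through the Service itself. Thanks to Kubernetes's built-in transparent load balancing and failure recovery, it does not matter how many backend processes there are, or whether one of them fails and is redeployed on another machine: calls to the service keep working. More importantly, the Service itself does not change once it has been created, so inside a Kubernetes cluster we no longer have to worry about a service's IP address changing.

Containers provide strong isolation, so it makes sense to run the processes that back a Service inside containers. For this purpose Kubernetes defines the Pod object: each service process is wrapped in a corresponding Pod and runs as a container in that Pod. To associate Services with Pods, Kubernetes attaches a Label to each Pod, for example name=mysql on the Pod running MySQL and name=php on the Pod running PHP, and then defines a Label Selector on the corresponding Service. This neatly solves the problem of linking a Service to its Pods.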
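To make the Label and Label Selector relationship concrete, here is a minimal sketch of equality-based selection in plain shell. The helper names `has_label` and `select_pods` and the pod names are hypothetical and exist only for illustration; the real matching happens inside Kubernetes.

```shell
#!/bin/sh
# has_label LABELS KEY=VALUE
# Succeeds if the comma-separated LABELS string contains exactly KEY=VALUE.
has_label() {
    case ",$1," in
        *",$2,"*) return 0 ;;
        *) return 1 ;;
    esac
}

# select_pods KEY=VALUE
# Reads "pod-name labels" lines on stdin and prints the names whose labels match.
select_pods() {
    while read -r pod labels; do
        if has_label "$labels" "$1"; then
            echo "$pod"
        fi
    done
}

# Hypothetical pods using the name=mysql / name=php labels from the text.
# Prints php-0 and php-1.
printf '%s\n' \
    'mysql-0 name=mysql,tier=backend' \
    'php-0 name=php,tier=frontend' \
    'php-1 name=php,tier=frontend' | select_pods 'name=php'
```

Against a real cluster, the equivalent query is `kubectl get pods -l name=php`.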

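For cluster management, Kubernetes divides the machines in a cluster into a Master node and a set of worker nodes (Nodes). The Master runs the cluster management processes kube-apiserver, kube-controller-manager, and kube-scheduler, which together implement resource management, Pod scheduling, elastic scaling, security control, system monitoring, and error correction for the whole cluster, all fully automated. Nodes are the cluster's workers and run the actual applications; the smallest unit Kubernetes manages on a Node is the Pod. Each Node runs the kubelet and kube-proxy service processes, which create, start, monitor, restart, and destroy Pods and implement a software load balancer.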

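Kubernetes addresses two long-standing problems of traditional IT systems: scaling services out and upgrading them. You only need to create a Replication Controller (RC) for the Pods associated with the Service you want to scale, and scaling and later upgrades of that Service become straightforward. An RC definition file contains three key pieces of information:

- The definition of the target Pod

- The number of replicas of the target Pod that should run (Replicas)

- The Label of the target Pods to monitor

Once the RC is created, Kubernetes uses the Label defined in the RC to select the matching Pod instances and continuously monitors their state and count. If there are fewer instances than the defined number of replicas, it creates a new Pod from the Pod template in the RC and schedules it onto a suitable Node, repeating until the number of Pod instances reaches the target. The whole process is fully automated.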
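The decision the RC makes on each pass can be sketched as a comparison between the desired and the observed replica count. This is only an illustration of the idea, not the controller's real code; the function name `reconcile` and its output format are assumptions made for this example.

```shell
#!/bin/sh
# reconcile DESIRED ACTUAL
# Prints the action a replication controller would take to converge on DESIRED.
reconcile() {
    desired=$1
    actual=$2
    if [ "$actual" -lt "$desired" ]; then
        echo "create $((desired - actual))"
    elif [ "$actual" -gt "$desired" ]; then
        echo "delete $((actual - desired))"
    else
        echo "none"
    fi
}

reconcile 3 1   # prints "create 2"
reconcile 3 5   # prints "delete 2"
reconcile 3 3   # prints "none"
```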

Advantages of Kubernetes:

- Container orchestration

- Lightweight

- Open source

- Elastic scaling

- Load balancing

Kubernetes Core Concepts

1.Master

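The management node of a k8s cluster. It manages the cluster and provides the access point for the cluster's resource data. It hosts the etcd storage service (optional), runs the API Server, Controller Manager, and Scheduler processes, and is associated with the worker Nodes. The Kubernetes API Server is the key service process that exposes the HTTP REST interface; it is the single entry point for creating, deleting, updating, and querying every resource in Kubernetes, and also the entry process for cluster control. The Kubernetes Controller Manager is the automation control center for all Kubernetes resource objects. The Kubernetes Scheduler is the process responsible for resource scheduling (Pod scheduling).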

2.Node

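A Node is a service node in the Kubernetes cluster that runs Pods (also called an agent or minion). It is the unit of operation in the cluster: it carries the Pods assigned to it and acts as their host. A Node is associated with the Master, has a name, an IP, and system resource information, and runs the Docker Engine service, the kubelet daemon, and the kube-proxy load balancer.

Each Node runs the following key processes:

- kubelet: creates, starts, and stops the containers that belong to each Pod

- kube-proxy: a key component that implements communication and load balancing for Kubernetes Services

- Docker Engine (Docker): creates and manages containers on the local machine

Nodes can be added to a Kubernetes cluster dynamically at run time. By default the kubelet registers itself with the Master, which is also the Node management approach Kubernetes recommends. The kubelet then reports its own status to the Master at regular intervals, such as the operating system, Docker version, CPU and memory, and which Pods are running. This lets the Master know each Node's resource usage and implement an efficient, balanced scheduling strategy.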

3.Pod

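A Pod runs on a Node and is a group of related containers. The containers in a Pod run on the same host, share the same network namespace, IP address, and port space, and can communicate over localhost. The Pod is the smallest unit that Kubernetes creates, schedules, and manages; it is a higher-level abstraction than a container, which makes deployment and management more flexible. A Pod can contain a single container or several related containers.

There are actually two kinds of Pod: ordinary Pods and static Pods. Static Pods are special: they are not stored in Kubernetes's etcd store but in a specific file on a specific Node, and they start only on that Node. An ordinary Pod is stored in etcd as soon as it is created, is then scheduled by the Kubernetes Master and bound to a specific Node, and is finally instantiated by the kubelet on that Node as a group of related Docker containers and started. By default, when a container in a Pod stops, Kubernetes detects the problem and restarts the Pod (that is, all containers in the Pod); if the Node hosting the Pod goes down, all Pods on that Node are rescheduled onto other nodes.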

4.Replication Controller

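A Replication Controller manages Pod replicas and ensures that the specified number of replicas exists in the cluster. If there are more replicas than specified, it stops the surplus ones; if there are fewer, it starts enough additional ones to make up the difference, keeping the count constant. The Replication Controller is the core mechanism behind elastic scaling, dynamic scale-out, and rolling upgrades.

5.Service

A Service defines a logical set of Pods and a policy for accessing that set; it is an abstraction of the real service. A Service provides a single access point together with service proxying and discovery, and is associated with multiple Pods that carry the same Label, so users do not need to know how the backend Pods run.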

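Accessing a Service from external systems

First we need to understand the three kinds of IP in Kubernetes:

Node IP: the IP address of a Node

Pod IP: the IP address of a Pod

Cluster IP: the IP address of a Service

First, the Node IP is the IP address of the physical network interface of a node in the Kubernetes cluster. All servers on this network can communicate with each other directly over it. This also means that when a machine outside the Kubernetes cluster accesses a node or a TCP/IP service inside the cluster, it must go through the Node IP.

Second, the Pod IP is the IP address of each Pod. It is assigned by Docker Engine from the address range of the docker0 bridge and usually belongs to a virtual layer-2 network.

Finally, the Cluster IP is a virtual IP, but it behaves more like a fake IP network, for the following reasons:

- A Cluster IP applies only to the Kubernetes Service object, and its address is managed and assigned by Kubernetes

- A Cluster IP cannot be pinged, because no "physical network object" exists to respond

- A Cluster IP only forms a usable communication endpoint in combination with a Service Port; on its own it cannot carry traffic, and both belong to the closed space of the Kubernetes cluster

Inside a Kubernetes cluster, traffic between the Node IP network, the Pod IP network, and the Cluster IP network uses special routing rules that Kubernetes sets up programmatically.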

6.Label

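Any API object in Kubernetes can be identified by Labels. A Label is essentially a key/value pair, with both key and value chosen by the user. Labels can be attached to all kinds of resource objects, such as Nodes, Pods, Services, and RCs. A resource object can define any number of Labels, and the same Label can be added to any number of resource objects. Labels are the basis on which Replication Controllers and Services operate: both use Labels to associate themselves with the Pods running on Nodes.

By attaching one or more Labels to a resource object we can group resources along several dimensions, which makes resource allocation, scheduling, configuration, and other management work flexible and convenient.

Some commonly used Labels:

- Release labels: "release":"stable", "release":"canary", ...

- Environment labels: "environment":"dev", "environment":"qa", "environment":"production"

- Tier labels: "tier":"frontend", "tier":"backend", "tier":"middleware"

- Partition labels: "partition":"customerA", "partition":"customerB"

- Quality-control labels: "track":"daily", "track":"weekly"

A Label works like the tags we are all familiar with: defining a Label on a resource object is like tagging it. Afterwards a Label Selector can query and filter the resource objects that carry certain Labels. In this way Kubernetes provides a simple, general object query mechanism similar to SQL.

Important uses of Label Selectors in Kubernetes:

- The kube-controller-manager process uses the Label Selector defined on an RC to select the Pod replicas to monitor, so that the replica count always matches the expected setting in a fully automated control loop

- The kube-proxy process uses a Service's Label Selector to select the matching Pods and automatically builds the request forwarding table from each Service to its Pods, which is how Service load balancing is implemented

- By defining specific Labels on certain Nodes and using the nodeSelector scheduling policy in a Pod definition file, the kube-scheduler process can schedule Pods onto designated Nodes ("targeted scheduling")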

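Kubernetes Architecture and Components

Kubernetes components:

The Kubernetes Master is the control component that schedules and manages the whole system (cluster). It contains the following components:

1. Kubernetes API Server

The entry point to the Kubernetes system. It encapsulates create, delete, update, and query operations on the core objects and exposes them as a RESTful API to external clients and internal components. The REST objects it maintains are persisted in etcd.

2. Kubernetes Scheduler

Selects a node for each newly created Pod (that is, assigns it a machine) and is responsible for cluster resource scheduling. Because it is a separate component, it can easily be replaced with another scheduler.

3. Kubernetes Controller Manager

Runs the various controllers. Many controllers are already provided to keep Kubernetes operating correctly.

4. Replication Controller

Manages Replication Controller objects, associates them with Pods, and ensures that the number of replicas defined in a Replication Controller matches the number of Pods actually running.

5. Node Controller

Manages Nodes, periodically checks their health, and marks each Node as failed or healthy.

6. Namespace Controller

Manages Namespaces and periodically cleans up invalid Namespaces, including the API objects under them, such as Pods and Services.

7. Service Controller

Manages Services and provides load balancing and service proxying.

8. Endpoints Controller

Manages Endpoints, associates Services with Pods, creates Endpoints as the backends of a Service, and updates the Endpoints in real time when Pods change.

9. Service Account Controller

Manages Service Accounts, creates a default Service Account for each Namespace, and creates a Service Account Secret for each Service Account.

10. Persistent Volume Controller

Manages Persistent Volumes and Persistent Volume Claims, binds a Persistent Volume to each new Persistent Volume Claim, and cleans up and reclaims released Persistent Volumes.

11. Daemon Set Controller

Manages Daemon Sets, creates Daemon Pods, and ensures that the Daemon Pod runs correctly on the designated Nodes.

12. Deployment Controller

Manages Deployments, associates Deployments with Replication Controllers, and ensures the specified number of Pods is running. When a Deployment is updated, it drives the update of the Replication Controller and the Pods.

13. Job Controller

Manages Jobs, creates one-off task Pods for each Job, and ensures that the number of completions specified by the Job is reached.

14. Pod Autoscaler Controller

Implements automatic Pod scaling: it periodically fetches monitoring data, matches it against the policy, and scales the Pods when the conditions are met.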

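A Kubernetes Node is a worker node that runs and manages the business containers. It contains the following components:

1. Kubelet

Manages containers. The kubelet receives Pod creation requests from the Kubernetes API Server, starts and stops containers, monitors their state, and reports it back to the Kubernetes API Server.

2. Kubernetes Proxy

Creates proxy services for Pods. The Kubernetes Proxy fetches all Service information from the Kubernetes API Server and creates proxies based on it, routing and forwarding requests from Services to Pods and thereby implementing a Kubernetes-level virtual forwarding network.

3. Docker

A container service must be running on the Node.

Deploying k8s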

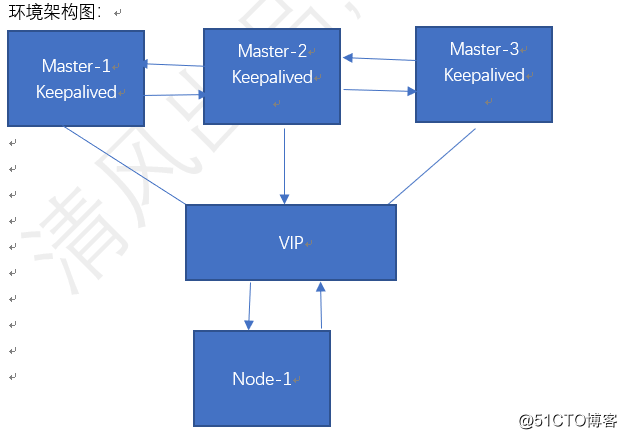

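Environment:

| Operating system | IP address | Hostname | Packages |
|---|---|---|---|
| CentOS7.3-x86_64 | 192.168.200.200 | Master-1 | Docker, kubeadm |
| CentOS7.3-x86_64 | 192.168.200.201 | Master-2 | Docker, kubeadm |
| CentOS7.3-x86_64 | 192.168.200.202 | Master-3 | Docker, kubeadm |
| CentOS7.3-x86_64 | 192.168.200.203 | Node-1 | Docker |

Environment architecture diagram:

Deploying the base environment

1.1 Install Docker-CE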

1. Check the master's system information:

[[email protected] ~]# hostname

master

[[email protected] ~]# cat /etc/centos-release

CentOS Linux release 7.3.1611 (Core)

[[email protected] ~]# uname -r

3.10.0-514.el7.x86_64

[[email protected] ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.200.200 master-1

192.168.200.201 master-2

192.168.200.202 master-3

192.168.200.203 node-1

2. Check the minion's system information:

[[email protected] ~]# hostname

master

[[email protected] ~]# cat /etc/centos-release

CentOS Linux release 7.5.1804 (Core)

[[email protected] ~]# uname -r

3.10.0-862.el7.x86_64

3. Install the dependencies:

[[email protected] ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

4. Configure the Aliyun mirror repository

[[email protected] ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

5. Install Docker-CE

[[email protected] ~]# yum install docker-ce -y

6. Start Docker-CE

[[email protected] ~]# systemctl enable docker

[[email protected] ~]# systemctl start docker

1.2 Install kubeadm

- Before installing kubeadm, configure the Aliyun mirror repository by running the following command:

[[email protected] ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

EOF

- Run the following commands to rebuild the Yum cache:

[[email protected] ~]# yum -y install epel-release

[[email protected] ~]# yum clean all

[[email protected] ~]# yum makecache

- Install kubeadm:

[[email protected] ~]# yum -y install kubelet kubeadm kubectl kubernetes-cni

- Enable the kubelet service:

[[email protected] ~]# systemctl enable kubelet && systemctl start kubelet

1.3 Prepare the images kubeadm needs

[[email protected] ~]# vim k8s.sh

#!/bin/bash

images=(kube-proxy-amd64:v1.11.0 kube-scheduler-amd64:v1.11.0 kube-controller-manager-amd64:v1.11.0 kube-apiserver-amd64:v1.11.0

etcd-amd64:3.2.18 coredns:1.1.3 pause-amd64:3.1 kubernetes-dashboard-amd64:v1.8.3 k8s-dns-sidecar-amd64:1.14.9 k8s-dns-kube-dns-amd64:1.14.9

k8s-dns-dnsmasq-nanny-amd64:1.14.9 )

for imageName in "${images[@]}" ; do

docker pull keveon/$imageName

docker tag keveon/$imageName k8s.gcr.io/$imageName

docker rmi keveon/$imageName

done

# Extra line I added myself; required for v1.11.0

docker tag da86e6ba6ca1 k8s.gcr.io/pause:3.1

[[email protected] ~]# bash k8s.sh
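In bash, looping over every element of an array requires the `"${images[@]}"` form; a bare `$images[@]` does not iterate over the array. The sketch below sidesteps arrays by taking the image names as positional arguments, and it only prints the docker commands so the plan can be reviewed before anything is pulled. The function name `print_retag_commands` is hypothetical; the mirror and target names are the ones used in the script above.

```shell
#!/bin/sh
# print_retag_commands MIRROR TARGET IMAGE...
# Prints (does not run) the pull/tag/rmi commands for each image.
print_retag_commands() {
    mirror=$1
    target=$2
    shift 2
    for image in "$@"; do
        echo "docker pull $mirror/$image"
        echo "docker tag $mirror/$image $target/$image"
        echo "docker rmi $mirror/$image"
    done
}

# Dry run for two of the images listed in k8s.sh.
print_retag_commands keveon k8s.gcr.io kube-proxy-amd64:v1.11.0 pause-amd64:3.1
```

Piping the output to `sh` would execute the printed commands once the list looks right.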

1.4 Disable swap

[[email protected] ~]# swapoff -a

[[email protected] ~]# vi /etc/fstab

#

#/etc/fstab

#Created by anaconda on Sun May 27 06:47:13 2018

#

#Accessible filesystems, by reference, are maintained under '/dev/disk'

#See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/cl-root / xfs defaults 0 0

UUID=07d1e156-eba8-452f-9340-49540b1c2bbb /boot xfs defaults 0 0

#/dev/mapper/cl-swap swap swap defaults 0 0
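Editing /etc/fstab by hand works, but the same change can be scripted. The following is a minimal sketch, assuming a sed that supports `-E` and `-i` with a backup suffix (GNU and BSD sed both do). The function name `disable_swap_entries` is hypothetical, and the demo runs on a temporary file rather than the real /etc/fstab.

```shell
#!/bin/sh
# disable_swap_entries FILE
# Comments out every active swap entry in an fstab-style FILE,
# leaving a backup copy in FILE.bak.
disable_swap_entries() {
    sed -i.bak -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# Demo on a temporary copy containing the two relevant lines from the listing above.
tmp=$(mktemp)
printf '%s\n' \
    '/dev/mapper/cl-root / xfs defaults 0 0' \
    '/dev/mapper/cl-swap swap swap defaults 0 0' > "$tmp"
disable_swap_entries "$tmp"
cat "$tmp"   # the swap line is now prefixed with '#'
rm -f "$tmp" "$tmp.bak"
```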

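Leaving swap enabled also works, but then the swap error has to be skipped at initialization time. Modify the configuration file as follows:

[[email protected] manifors]# vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false" # keep swap enabled

KUBE_PROXY_MODE=ipvs # enable IPVS; if unset, iptables is used

To use IPVS, the required kernel modules must be installed and loaded beforehand.

1.5 Disable SELinux and the firewall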

[[email protected] ~]# setenforce 0

[[email protected] ~]# systemctl stop firewalld

1.6 Configure forwarding parameters

[[email protected] ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

vm.swappiness=0

EOF

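[[email protected] ~]# sysctl --system

Note: all of the base environment steps above must be performed on every node, including the worker node.

Deploying three masters for high availability

Deploy keepalived on the master nodes

2.1. Deploy keepalived on all masters; the worker node connects to the VIP, which provides high availability

[[email protected] ~]# yum install -y keepalived

2.2. keepalived configuration (for reference only):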

[[email protected] ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
   notification_email {
     [email protected]
     [email protected]
     [email protected]
   }
   notification_email_from [email protected]
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
   vrrp_skip_check_adv_addr
   #vrrp_strict
   vrrp_garp_interval 0
   vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.200.16
    }
}

2.3. Start keepalived and test:

[[email protected] ~]# systemctl start keepalived

[[email protected] ~]# ip a show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:29:85:5b brd ff:ff:ff:ff:ff:ff

inet 192.168.200.200/24 brd 192.168.200.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.200.16/32 scope global ens33 # the VIP is present

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe29:855b/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:48:16:c2:20 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

Do the same on the other two masters.

2.4. keepalived configuration for Master-2

[[email protected] ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
   notification_email {
     [email protected]
     [email protected]
     [email protected]
   }
   notification_email_from [email protected]
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
   vrrp_skip_check_adv_addr
   #vrrp_strict
   vrrp_garp_interval 0
   vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 51
    priority 90 # lower priority than the master
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.200.16
    }
}

2.5. keepalived configuration for Master-3

[[email protected] ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
   notification_email {
     [email protected]
     [email protected]
     [email protected]
   }
   notification_email_from [email protected]
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
   vrrp_skip_check_adv_addr
   #vrrp_strict
   vrrp_garp_interval 0
   vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 51
    priority 80
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.200.16
    }
}

2.6. Test keepalived

[[email protected] ~]# systemctl start keepalived

[[email protected] ~]# ip a show

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:0f:6b:3a brd ff:ff:ff:ff:ff:ff

inet 192.168.200.201/24 brd 192.168.200.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe0f:6b3a/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:e8:3a:d6:b1 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

[[email protected] ~]# systemctl start keepalived

[[email protected] ~]# ip a show

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:1a:fd:85 brd ff:ff:ff:ff:ff:ff

inet 192.168.200.202/24 brd 192.168.200.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe1a:fd85/64 scope link

valid_lft forever preferred_lft forever

Neither master-2 nor master-3 holds the VIP. Now stop keepalived on master-1:

[[email protected] ~]# systemctl stop keepalived

[[email protected] ~]# ip a show

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:29:85:5b brd ff:ff:ff:ff:ff:ff

inet 192.168.200.200/24 brd 192.168.200.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe29:855b/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:48:16:c2:20 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

master-1 no longer holds the VIP.

[[email protected] ~]# ip a show

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:0f:6b:3a brd ff:ff:ff:ff:ff:ff

inet 192.168.200.201/24 brd 192.168.200.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.200.16/32 scope global ens33 # the VIP has moved to master-2

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe0f:6b3a/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:e8:3a:d6:b1 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

[[email protected] ~]# ip a show # master-3 still does not have it

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:1a:fd:85 brd ff:ff:ff:ff:ff:ff

inet 192.168.200.202/24 brd 192.168.200.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe1a:fd85/64 scope link

valid_lft forever preferred_lft forever

Next, stop keepalived on master-2:

[[email protected] ~]# systemctl stop keepalived

[[email protected] ~]# ip a show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:0f:6b:3a brd ff:ff:ff:ff:ff:ff

inet 192.168.200.201/24 brd 192.168.200.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe0f:6b3a/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:e8:3a:d6:b1 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

[[email protected] ~]# ip a show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:1a:fd:85 brd ff:ff:ff:ff:ff:ff

inet 192.168.200.202/24 brd 192.168.200.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.200.16/32 scope global ens33 # the VIP has floated to master-3

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe1a:fd85/64 scope link

valid_lft forever preferred_lft forever

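The above shows that keepalived is working correctly.

Note: this experiment uses the default configuration throughout, and keepalived defaults to preemptive mode, so once keepalived is started again on each node in turn, the VIP ends up back on master-1.

If all three machines hold the VIP when you first start keepalived, don't panic: most likely your firewall or SELinux has not been disabled.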
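The failover behaviour observed above follows from the priorities in the three configurations: among the nodes whose keepalived is running, the one with the highest priority holds the VIP. The sketch below models only that rule; it is a simplification (real VRRP also involves advertisement timers and tie-breaking), and the function name `vip_holder` is hypothetical.

```shell
#!/bin/sh
# vip_holder
# Reads "name priority" lines for the nodes whose keepalived is running
# and prints the name with the highest priority.
vip_holder() {
    awk 'NR == 1 || $2 + 0 > max { max = $2 + 0; name = $1 } END { print name }'
}

# All three masters running: prints master-1
printf '%s\n' 'master-1 100' 'master-2 90' 'master-3 80' | vip_holder

# keepalived stopped on master-1: prints master-2
printf '%s\n' 'master-2 90' 'master-3 80' | vip_holder

# keepalived stopped on master-1 and master-2: prints master-3
printf '%s\n' 'master-3 80' | vip_holder
```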

2.7. Configure kubelet

# Configure kubelet to use the Aliyun pause image; the official image is blocked in China, so kubelet cannot start without this

[[email protected] ~]# cat > /etc/sysconfig/kubelet <<EOF

KUBELET_EXTRA_ARGS="--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"

EOF

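# Reload the kubelet systemd configuration

[[email protected] ~]# systemctl daemon-reload

# Enable kubelet at boot, but do not start it yet

[[email protected] ~]# systemctl enable kubelet

Note: perform the steps above on all nodes.

Configuring the first master node:

3.1 Write a script that generates the configuration file in one step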

[[email protected] ~]# vim kube.sh

#!/bin/bash
# Set the node variables; the IPs and hostnames below are referenced through them.
# Adjust the IPs to match your own environment.
CP0_IP="192.168.200.200"
CP0_HOSTNAME="master-1"
CP1_IP="192.168.200.201"
CP1_HOSTNAME="master-2"
CP2_IP="192.168.200.202"
CP2_HOSTNAME="master-3"
ADVERTISE_VIP="192.168.200.16" # this is the VIP
# Generate the kubeadm configuration file
cat > kubeadm-master.config <<EOF
apiVersion: kubeadm.k8s.io/v1alpha2
kind: MasterConfiguration
# Kubernetes version
kubernetesVersion: v1.11.1
# Use the Aliyun mirror inside China
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
apiServerCertSANs:
- "$CP0_HOSTNAME"
- "$CP0_IP"
- "$ADVERTISE_VIP"
- "127.0.0.1"
api:
  advertiseAddress: $CP0_IP
  controlPlaneEndpoint: $ADVERTISE_VIP:6443
etcd:
  local:
    extraArgs:
      listen-client-urls: "https://127.0.0.1:2379,https://$CP0_IP:2379"
      advertise-client-urls: "https://$CP0_IP:2379"
      listen-peer-urls: "https://$CP0_IP:2380"
      initial-advertise-peer-urls: "https://$CP0_IP:2380"
      initial-cluster: "$CP0_HOSTNAME=https://$CP0_IP:2380"
    serverCertSANs:
    - $CP0_HOSTNAME
    - $CP0_IP
controllerManagerExtraArgs:
  node-monitor-grace-period: 10s
  pod-eviction-timeout: 10s
networking:
  podSubnet: 10.244.0.0/16
kubeProxy:
  config:
    # mode: ipvs # kube-proxy mode. Uncomment this if the IPVS kernel modules are loaded; without them kube-proxy falls back to iptables. IPVS is not enabled on my system, so iptables is used here.
    mode: iptables
EOF

3.2 Run the script to generate the configuration file

[[email protected] ~]# sh kube.sh

[[email protected] ~]# ls

anaconda-ks.cfg k8s.sh kubeadm-master.config kube.sh

3.3 Pull the images required by the configuration file

[[email protected] ~]# kubeadm config images pull --config kubeadm-master.config

[endpoint] WARNING: port specified in api.controlPlaneEndpoint overrides api.bindPort in the controlplane address

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver-amd64:v1.12.2

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager-amd64:v1.12.2

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler-amd64:v1.12.2

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.12.2

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/etcd-amd64:3.2.18

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.1.3

3.4 Initialize

[[email protected] ~]# kubeadm init --config kubeadm-master.config --ignore-preflight-errors='SystemVerification'

...... # earlier output omitted

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[endpoint] WARNING: port specified in api.controlPlaneEndpoint overrides api.bindPort in the controlplane address

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 192.168.200.16:6443 --token g8hzyf.8185orcoq845489s --discovery-token-ca-cert-hash sha256:759e92bee1931f6e89deefd30c0d62ad4ec810bd40688e43ee7e1749ccd6c370

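# The kubeadm join command above is the one needed later to add a worker node

Note: the --ignore-preflight-errors option can be omitted. I added it because my Docker version caused an error, and this option lets kubeadm ignore it.

3.5 Check the node status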

[[email protected] ~]# mkdir -p $HOME/.kube

[[email protected] ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[[email protected] ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-1 NotReady master 5m42s v1.11.1

# Only one master is listed, and its status is NotReady
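When more nodes are present, scanning the table by eye gets tedious. A small filter over the output of `kubectl get nodes` can list just the nodes that are not Ready. This is a sketch that assumes the column layout shown above (NAME first, STATUS second); the function name `not_ready_nodes` is hypothetical, and any status other than exactly "Ready" is reported.

```shell
#!/bin/sh
# not_ready_nodes
# Reads `kubectl get nodes` output on stdin and prints the names
# of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Demo with the output shown above; prints master-1.
printf '%s\n' \
    'NAME STATUS ROLES AGE VERSION' \
    'master-1 NotReady master 5m42s v1.11.1' | not_ready_nodes
```

On a live cluster this would be used as `kubectl get nodes | not_ready_nodes`.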

3.6 Install the network plugin

[[email protected] ~]# kubectl apply -f https://git.io/weave-kube-1.6

serviceaccount/weave-net created

clusterrole.rbac.authorization.k8s.io/weave-net created

clusterrolebinding.rbac.authorization.k8s.io/weave-net created

role.rbac.authorization.k8s.io/weave-net created

rolebinding.rbac.authorization.k8s.io/weave-net created

daemonset.extensions/weave-net created

3.7 Check the node status

[[email protected] ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-1 Ready master 18m v1.11.1

# The status is now Ready

3.8 Upload the certificates generated on this master to the other master nodes

[[email protected] ~]# cd /etc/kubernetes

[[email protected] kubernetes]# tar cvzf k8s-key.tgz pki/ca.* pki/sa.* pki/front-proxy-ca.* pki/etcd/ca.*

[[email protected] kubernetes]# ls

admin.conf controller-manager.conf k8s-key.tgz kubelet.conf manifests pki scheduler.conf

[[email protected] kubernetes]# scp /etc/kubernetes/k8s-key.tgz 192.168.200.201:/etc/kubernetes

[[email protected] kubernetes]# scp /etc/kubernetes/k8s-key.tgz 192.168.200.202:/etc/kubernetes

Configuring the second master node

4.1 First extract the certificate archive uploaded from master-1

[[email protected] ~]# cd /etc/kubernetes/

[[email protected] kubernetes]# ls

k8s-key.tgz manifests

[[email protected] kubernetes]# tar xf k8s-key.tgz

[[email protected] kubernetes]# ls

k8s-key.tgz manifests pki

4.2 Write the script that generates the configuration file

[[email protected] kubernetes]# vim kube.sh

#!/bin/bash
# Set the node variables; the IPs and hostnames below are referenced through them.
CP0_IP="192.168.200.200"
CP0_HOSTNAME="master-1"
CP1_IP="192.168.200.201"
CP1_HOSTNAME="master-2"
CP2_IP="192.168.200.202"
CP2_HOSTNAME="master-3"
ADVERTISE_VIP="192.168.200.16"
# Generate the kubeadm configuration file
cat > kubeadm-master.config <<EOF
apiVersion: kubeadm.k8s.io/v1alpha2
kind: MasterConfiguration
# Kubernetes version
kubernetesVersion: v1.11.1
# Use the Aliyun mirror inside China
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
apiServerCertSANs:
- "$CP1_HOSTNAME"
- "$CP1_IP"
- "$ADVERTISE_VIP"
- "127.0.0.1"
api:
  advertiseAddress: $CP1_IP
  controlPlaneEndpoint: $ADVERTISE_VIP:6443
etcd:
  local:
    extraArgs:
      listen-client-urls: "https://127.0.0.1:2379,https://$CP1_IP:2379"
      advertise-client-urls: "https://$CP1_IP:2379"
      listen-peer-urls: "https://$CP1_IP:2380"
      initial-advertise-peer-urls: "https://$CP1_IP:2380"
      initial-cluster: "$CP0_HOSTNAME=https://$CP0_IP:2380,$CP1_HOSTNAME=https://$CP1_IP:2380"
      initial-cluster-state: existing
    serverCertSANs:
    - $CP1_HOSTNAME
    - $CP1_IP
    peerCertSANs:
    - $CP1_HOSTNAME
    - $CP1_IP
controllerManagerExtraArgs:
  node-monitor-grace-period: 10s
  pod-eviction-timeout: 10s
networking:
  podSubnet: 10.244.0.0/16
kubeProxy:
  config:
    # mode: ipvs
    mode: iptables
EOF

Note: this configuration file differs from the one on master-1, so do not simply copy master-1's file over.
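One of the differences is the etcd `initial-cluster` value, which grows by one member with each master that joins. The sketch below builds that string for the first N masters, using the hostnames and IPs from this environment. The function name `initial_cluster` is hypothetical and only illustrates the pattern.

```shell
#!/bin/sh
# initial_cluster COUNT
# Prints the etcd initial-cluster value covering the first COUNT masters.
initial_cluster() {
    count=$1
    result=""
    i=0
    for entry in \
        "master-1=192.168.200.200" \
        "master-2=192.168.200.201" \
        "master-3=192.168.200.202"; do
        if [ "$i" -ge "$count" ]; then
            break
        fi
        name=${entry%%=*}   # text before the first '='
        ip=${entry#*=}      # text after the first '='
        result="${result:+$result,}$name=https://$ip:2380"
        i=$((i + 1))
    done
    echo "$result"
}

initial_cluster 1   # value used on master-1
initial_cluster 2   # value used on master-2
initial_cluster 3   # value used on master-3
```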

4.3 Run the script to generate the file

[[email protected] kubernetes]# sh kube.sh

[[email protected] kubernetes]# ls

k8s-key.tgz kubeadm-master.config kube.sh manifests pki

4.4 Pull the images required by the configuration file

[[email protected] kubernetes]# kubeadm config images pull --config kubeadm-master.config

[endpoint] WARNING: port specified in api.controlPlaneEndpoint overrides api.bindPort in the controlplane address

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver-amd64:v1.11.1

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager-amd64:v1.11.1

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler-amd64:v1.11.1

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.11.1

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/etcd-amd64:3.2.18

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.1.3

4.5 Generate the certificates

[[email protected] kubernetes]# kubeadm alpha phase certs all --config kubeadm-master.config

4.6 Generate the kubelet configuration files

[[email protected] kubernetes]# kubeadm alpha phase kubelet config write-to-disk --config kubeadm-master.config

[[email protected] kubernetes]# kubeadm alpha phase kubelet write-env-file --config kubeadm-master.config

[[email protected] kubernetes]# kubeadm alpha phase kubeconfig kubelet --config kubeadm-master.config

4.7 Start kubelet

[[email protected] kubernetes]# systemctl restart kubelet

4.8 Generate the kubeconfig files for the control plane components (kube-apiserver, kube-controller-manager, kube-scheduler, and so on)

[[email protected] kubernetes]# kubeadm alpha phase kubeconfig all --config kubeadm-master.config

4.9 Set up the default kubectl configuration file

[[email protected] kubernetes]# mkdir ~/.kube

[[email protected] kubernetes]# cp /etc/kubernetes/admin.conf ~/.kube/config

4.10 Check the node status

[[email protected] kubernetes]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-1 Ready master 58m v1.11.1

master-2 Ready <none> 2m37s v1.11.1

4.11 Add master-2 to the etcd cluster

[[email protected] kubernetes]# kubectl exec -n kube-system etcd-master-1 -- etcdctl --ca-file /etc/kubernetes/pki/etcd/ca.crt --cert-file /etc/kubernetes/pki/etcd/peer.crt --key-file /etc/kubernetes/pki/etcd/peer.key --endpoints=https://192.168.200.200:2379 member add master-2 https://192.168.200.201:2380

Added member named master-2 with ID 25974124f9b03316 to cluster

ETCD_NAME="master-2"

ETCD_INITIAL_CLUSTER="master-2=https://192.168.200.201:2380,master-1=https://192.168.200.200:2380"

ETCD_INITIAL_CLUSTER_STATE="existing"

4.12 Deploy the etcd static pod

[[email protected] kubernetes]# kubeadm alpha phase etcd local --config kubeadm-master.config

4.13 List the etcd members

[[email protected] kubernetes]# kubectl exec -n kube-system etcd-master-1 -- etcdctl --ca-file /etc/kubernetes/pki/etcd/ca.crt --cert-file /etc/kubernetes/pki/etcd/peer.crt --key-file /etc/kubernetes/pki/etcd/peer.key --endpoints=https://192.168.200.200:2379 member list

25974124f9b03316: name=master-2 peerURLs=https://192.168.200.201:2380 clientURLs=https://192.168.200.201:2379 isLeader=false

f1751f15f702dfc9: name=master-1 peerURLs=https://192.168.200.200:2380 clientURLs=https://192.168.200.200:2379 isLeader=true
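The `member list` output can also be checked from a script, for example to confirm which member is currently the leader. A minimal sketch, assuming the etcdctl v2 output format shown above; the function name `etcd_leader` is hypothetical.

```shell
#!/bin/sh
# etcd_leader
# Reads `etcdctl member list` (v2 format) on stdin and prints the name
# of the member marked isLeader=true.
etcd_leader() {
    awk '/isLeader=true/ {
        for (i = 1; i <= NF; i++) {
            if ($i ~ /^name=/) {
                sub(/^name=/, "", $i)
                print $i
            }
        }
    }'
}

# Demo with the two lines shown above; prints master-1.
printf '%s\n' \
    '25974124f9b03316: name=master-2 peerURLs=https://192.168.200.201:2380 clientURLs=https://192.168.200.201:2379 isLeader=false' \
    'f1751f15f702dfc9: name=master-1 peerURLs=https://192.168.200.200:2380 clientURLs=https://192.168.200.200:2379 isLeader=true' | etcd_leader
```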

4.14 Write the control plane static pod manifests; kubelet then starts the components automatically

[[email protected] kubernetes]# kubeadm alpha phase controlplane all --config kubeadm-master.config

[endpoint] WARNING: port specified in api.controlPlaneEndpoint overrides api.bindPort in the controlplane address

[controlplane] wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

4.15 Check the node and the pods running on it

[[email protected] kubernetes]# kubectl get pods --all-namespaces -o wide |grep master-2

kube-system etcd-master-2 1/1 Running 0 3m20s 192.168.200.201 master-2 <none>

kube-system kube-apiserver-master-2 1/1 Running 0 91s 192.168.200.201 master-2 <none>

kube-system kube-controller-manager-master-2 1/1 Running 0 91s 192.168.200.201 master-2 <none>

kube-system kube-proxy-dn8rr 1/1 Running 0 9m57s 192.168.200.201 master-2 <none>

kube-system kube-scheduler-master-2 1/1 Running 0 91s 192.168.200.201 master-2 <none>

kube-system weave-net-tvr6n 2/2 Running 2 9m57s 192.168.200.201 master-2 <none>

4.16 Mark the node as a master

[[email protected] kubernetes]# kubeadm alpha phase mark-master --config kubeadm-master.config

[endpoint] WARNING: port specified in api.controlPlaneEndpoint overrides api.bindPort in the controlplane address

[markmaster] Marking the node master-2 as master by adding the label "node-role.kubernetes.io/master=''"

[markmaster] Marking the node master-2 as master by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[[email protected] kubernetes]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-1 Ready master 69m v1.11.1

master-2 Ready master 13m v1.11.1

# master-2 is now running normally as well

Deploying the third master node

5.1 First extract the certificate archive copied over from master-1

[[email protected] ~]# cd /etc/kubernetes/

[[email protected] kubernetes]# ls

k8s-key.tgz manifests

[[email protected] kubernetes]# tar xf k8s-key.tgz

[[email protected] kubernetes]# ls

k8s-key.tgz manifests pki

5.2 Write the configuration script and generate the configuration file

[[email protected] ~]# vim kube.sh

#!/bin/bash
# Set the node variables; the IPs and hostnames below are referenced through them.
CP0_IP="192.168.200.200"
CP0_HOSTNAME="master-1"
CP1_IP="192.168.200.201"
CP1_HOSTNAME="master-2"
CP2_IP="192.168.200.202"
CP2_HOSTNAME="master-3"
ADVERTISE_VIP="192.168.200.16"
# Generate the kubeadm configuration file. Apart from the changed IPs, the main
# difference from the first master is the etcd configuration for joining the existing cluster.
cat > kubeadm-master.config <<EOF
apiVersion: kubeadm.k8s.io/v1alpha2
kind: MasterConfiguration
# Kubernetes version
kubernetesVersion: v1.11.1
# Use the Aliyun mirror inside China
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
apiServerCertSANs:
- "$CP2_HOSTNAME"
- "$CP2_IP"
- "$ADVERTISE_VIP"
- "127.0.0.1"
api:
  advertiseAddress: $CP2_IP
  controlPlaneEndpoint: $ADVERTISE_VIP:6443
etcd:
  local:
    extraArgs:
      listen-client-urls: "https://127.0.0.1:2379,https://$CP2_IP:2379"
      advertise-client-urls: "https://$CP2_IP:2379"
      listen-peer-urls: "https://$CP2_IP:2380"
      initial-advertise-peer-urls: "https://$CP2_IP:2380"
      initial-cluster: "$CP0_HOSTNAME=https://$CP0_IP:2380,$CP1_HOSTNAME=https://$CP1_IP:2380,$CP2_HOSTNAME=https://$CP2_IP:2380"
      initial-cluster-state: existing
    serverCertSANs:
    - $CP2_HOSTNAME
    - $CP2_IP
    peerCertSANs:
    - $CP2_HOSTNAME
    - $CP2_IP
controllerManagerExtraArgs:
  node-monitor-grace-period: 10s
  pod-eviction-timeout: 10s
networking:
  podSubnet: 10.244.0.0/16
kubeProxy:
  config:
    # mode: ipvs
    mode: iptables
EOF

[[email protected] kubernetes]# cd

[[email protected] ~]# vim kube.sh

[[email protected] ~]# sh kube.sh

[[email protected] ~]# ls

anaconda-ks.cfg k8s.sh kubeadm-master.config kube.sh
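The `initial-cluster` value in the configuration file is just a comma-separated list of `name=https://ip:2380` entries, one per etcd member. As a minimal sketch of how that string is assembled, the hypothetical helper `build_initial_cluster` below (not part of kubeadm or etcd) builds it from `name=ip` pairs, assuming the peer port 2380 and https scheme used in this article.

```shell
#!/bin/sh
# build_initial_cluster (hypothetical helper): turn "name=ip" arguments into
# the value expected by etcd's initial-cluster setting.
# Assumes peer port 2380 and https, as in the configuration above.
build_initial_cluster() {
    out=""
    for pair in "$@"; do
        name=${pair%%=*}      # text before the first "="
        ip=${pair#*=}         # text after the first "="
        out="${out:+$out,}${name}=https://${ip}:2380"
    done
    printf '%s\n' "$out"
}

# The three masters used in this article.
build_initial_cluster \
    master-1=192.168.200.200 \
    master-2=192.168.200.201 \
    master-3=192.168.200.202
```

Every member that already exists in the cluster, plus the one being added, has to appear in this list, which is why the value grows with each master.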

5.3 Pull the images

[[email protected] ~]# kubeadm config images pull --config kubeadm-master.config

[endpoint] WARNING: port specified in api.controlPlaneEndpoint overrides api.bindPort in the controlplane address

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver-amd64:v1.12.2

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager-amd64:v1.12.2

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler-amd64:v1.12.2

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.12.2

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/etcd-amd64:3.2.18

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.1.3

5.4 Generate the certificates and related configuration files

[[email protected] ~]# kubeadm alpha phase certs all --config kubeadm-master.config

[[email protected] ~]# kubeadm alpha phase kubelet config write-to-disk --config kubeadm-master.config

[[email protected] ~]# kubeadm alpha phase kubelet write-env-file --config kubeadm-master.config

[[email protected] ~]# kubeadm alpha phase kubeconfig kubelet --config kubeadm-master.config

5.5 Start kubelet

[[email protected] ~]# systemctl restart kubelet

5.6 Generate the kubeconfig files for the control-plane components (kube-apiserver, kube-controller-manager, kube-scheduler, and so on)

[[email protected] ~]# kubeadm alpha phase kubeconfig all --config kubeadm-master.config

5.7 Set up the default kubectl configuration file

[[email protected] ~]# mkdir ~/.kube

[[email protected] ~]# cp /etc/kubernetes/admin.conf ~/.kube/config

5.8 Add master-3 to the etcd cluster

[[email protected] ~]# kubectl exec -n kube-system etcd-master-1 -- etcdctl --ca-file /etc/kubernetes/pki/etcd/ca.crt --cert-file /etc/kubernetes/pki/etcd/peer.crt --key-file /etc/kubernetes/pki/etcd/peer.key --endpoints=https://192.168.200.200:2379 member add master-3 https://192.168.200.202:2380

Added member named master-3 with ID b757e394754001e8 to cluster

ETCD_NAME="master-3"

ETCD_INITIAL_CLUSTER="master-2=https://192.168.200.201:2380,master-3=https://192.168.200.202:2380,master-1=https://192.168.200.200:2380"

ETCD_INITIAL_CLUSTER_STATE="existing"

5.9 Deploy the etcd static pod

[[email protected] ~]# kubeadm alpha phase etcd local --config kubeadm-master.config

[endpoint] WARNING: port specified in api.controlPlaneEndpoint overrides api.bindPort in the controlplane address

[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"

5.10 List the etcd members

[[email protected] ~]# kubectl exec -n kube-system etcd-master-1 -- etcdctl --ca-file /etc/kubernetes/pki/etcd/ca.crt --cert-file /etc/kubernetes/pki/etcd/peer.crt --key-file /etc/kubernetes/pki/etcd/peer.key --endpoints=https://192.168.200.200:2379 member list

4e28e0057914c780: name=master-2 peerURLs=https://192.168.200.201:2380 clientURLs=https://192.168.200.201:2379 isLeader=false

b757e394754001e8[unstarted]: peerURLs=https://192.168.200.202:2380

f1751f15f702dfc9: name=master-1 peerURLs=https://192.168.200.200:2380 clientURLs=https://192.168.200.200:2379 isLeader=true
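In the listing above, the new member is still marked `[unstarted]` because its etcd pod has not joined yet. A minimal sketch for spotting such members in the `member list` output follows; `unstarted_members` is a hypothetical helper name, and the parsing assumes the etcdctl v2 output format shown above.

```shell
#!/bin/sh
# unstarted_members (hypothetical helper): reads `etcdctl member list`
# output (v2 format, as shown above) on stdin and prints the ID of every
# member that is still flagged [unstarted].
unstarted_members() {
    awk '/\[unstarted\]/ {
        sub(/\[unstarted\]:.*/, "", $1)   # keep only the member ID
        print $1
    }'
}

# Sample output copied from the listing above.
unstarted_members <<'EOF'
4e28e0057914c780: name=master-2 peerURLs=https://192.168.200.201:2380 clientURLs=https://192.168.200.201:2379 isLeader=false
b757e394754001e8[unstarted]: peerURLs=https://192.168.200.202:2380
f1751f15f702dfc9: name=master-1 peerURLs=https://192.168.200.200:2380 clientURLs=https://192.168.200.200:2379 isLeader=true
EOF
# prints b757e394754001e8
```

Once the etcd static pod on master-3 is up, rerunning the `member list` command should show the member with a name and client URL, and this helper should print nothing.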

5.11 Write the control-plane static pod manifests; kubelet starts the components automatically

[[email protected] ~]# kubeadm alpha phase controlplane all --config kubeadm-master.config

[endpoint] WARNING: port specified in api.controlPlaneEndpoint overrides api.bindPort in the controlplane address

[controlplane] wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

5.12 Mark the node as a master

[[email protected] ~]# kubeadm alpha phase mark-master --config kubeadm-master.config

[endpoint] WARNING: port specified in api.controlPlaneEndpoint overrides api.bindPort in the controlplane address

[markmaster] Marking the node master-3 as master by adding the label "node-role.kubernetes.io/master=''"

[markmaster] Marking the node master-3 as master by adding the taints [node-role.kubernetes.io/master:NoSchedule]

5.13 Check the status of each node

[[email protected] ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-1 Ready master 81m v1.11.1

master-2 Ready master 19m v1.11.1

master-3 Ready master 6m58s v1.11.1
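For scripted checks, the node table can be reduced to a single number. The sketch below assumes the column layout shown above (NAME, STATUS, ...); `count_ready_nodes` is a hypothetical helper name, not a kubectl feature.

```shell
#!/bin/sh
# count_ready_nodes (hypothetical helper): reads `kubectl get nodes` output
# on stdin (including the header line) and prints how many nodes have
# STATUS exactly "Ready".
count_ready_nodes() {
    awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Sample output copied from the listing above.
count_ready_nodes <<'EOF'
NAME STATUS ROLES AGE VERSION
master-1 Ready master 81m v1.11.1
master-2 Ready master 19m v1.11.1
master-3 Ready master 6m58s v1.11.1
EOF
# prints 3
```

On a live cluster this would be used as `kubectl get nodes | count_ready_nodes`; a node reported as `NotReady` is not counted because the comparison is an exact match.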

Deploying the worker node

6.1 Join node-1 to the k8s cluster

[[email protected] ~]# kubeadm join 192.168.200.16:6443 --token ub9tas.mien6pn08jm1nl1z --discovery-token-ca-cert-hash sha256:0606874e30ca04dc4e9d8c72e0ab1f3e9016e498742bf47500fb31fa702c2c3c --ignore-preflight-errors='SystemVerification'
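The value passed to `--discovery-token-ca-cert-hash` is the SHA-256 digest of the cluster CA's public key, so it can be recomputed on a master if the original `kubeadm init` output is no longer at hand. The sketch below wraps the openssl pipeline from the kubeadm documentation in a hypothetical helper, `ca_cert_hash`; it assumes an RSA CA key and that the CA certificate sits at the kubeadm default path `/etc/kubernetes/pki/ca.crt`.

```shell
#!/bin/sh
# ca_cert_hash (hypothetical helper): print the hex SHA-256 digest of the
# DER-encoded public key of the certificate given as $1.
# Assumes an RSA key, which is what kubeadm generates for the cluster CA.
ca_cert_hash() {
    openssl x509 -pubkey -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Only runs on a host that actually has the kubeadm CA certificate.
if [ -r /etc/kubernetes/pki/ca.crt ]; then
    echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
fi
```

Bootstrap tokens expire after 24 hours by default, so a join attempted later needs a fresh token as well; `kubeadm token create --print-join-command`, run on a master, should print a complete join command with both values.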

6.2 Check the node status

[[email protected] kubernetes]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-1 Ready master 1h v1.11.1

master-2 Ready master 1h v1.11.1

master-3 Ready master 32m v1.11.1

node-1 Ready <none> 3m v1.11.1

6.3 Create a test nginx pod

[[email protected] ~]# kubectl run nginx-deploy --image=nginx:1.14-alpine --port=80 --replicas=1

deployment.apps/nginx-deploy created

[[email protected] ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deploy-5b595999-kqnsc 1/1 Running 0 1m

[[email protected] ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-deploy-5b595999-kqnsc 1/1 Running 0 1m 10.42.0.1 node-1

[[email protected] ~]# curl 10.42.0.1

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

The test shows that both the master nodes and the worker node work normally.

Testing high availability

7.1 Test master high availability by shutting down one of the master nodes

[[email protected] ~]# ip a show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:0f:6b:3a brd ff:ff:ff:ff:ff:ff

inet 192.168.200.201/24 brd 192.168.200.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.200.16/32 scope global ens33 # after master-1 was shut down, the VIP moved to master-2

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe0f:6b3a/64 scope link
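To confirm a failover from a script rather than by reading the interface dump, one can look for the VIP among the host's IPv4 addresses. This is a minimal sketch that parses `ip a show` style output; `has_vip` is a hypothetical helper name, and 192.168.200.16 is the VIP used throughout this article.

```shell
#!/bin/sh
# has_vip (hypothetical helper): succeeds if the address given as $1 appears
# as an IPv4 address ("inet <addr>/<prefix>") in the `ip a show` output
# read from stdin. The match is exact, not a prefix match.
has_vip() {
    awk -v vip="$1" '
        $1 == "inet" {
            split($2, a, "/")          # strip the /prefix length
            if (a[1] == vip) found = 1
        }
        END { exit !found }
    '
}

# Sample lines copied from the output above.
sample='inet 127.0.0.1/8 scope host lo
inet 192.168.200.201/24 brd 192.168.200.255 scope global noprefixroute ens33
inet 192.168.200.16/32 scope global ens33'

if printf '%s\n' "$sample" | has_vip 192.168.200.16; then
    echo "VIP 192.168.200.16 is on this host"
fi
```

On a real master this would be run as `ip a show | has_vip 192.168.200.16`; exactly one master should report success at any given time.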

[[email protected] ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-1 NotReady master 3h v1.11.1 # master-1 has stopped working

master-2 Ready master 3h v1.11.1

master-3 Ready master 39m v1.11.1

node-1 NotReady <none> 16m v1.11.1

[[email protected] ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deploy-5b595999-svgp7 1/1 Running 0 42s

[[email protected] ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-deploy-5b595999-svgp7 1/1 Running 0 50s 10.36.0.1 node-1

[[email protected] ~]# curl 10.36.0.1

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

The test shows that the cluster as a whole keeps working without master-1.

7.2 Test exposing the nginx port through a Service

[[email protected] ~]# kubectl expose deployment nginx-deploy --name nginx --port=80 --target-port=80 --protocol=TCP

service/nginx exposed

[[email protected] ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h

nginx ClusterIP 10.106.146.49 <none> 80/TCP 10s
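The cluster IP assigned to the new Service can be picked out of this table instead of being copied by hand. The sketch below assumes the column layout shown above (NAME, TYPE, CLUSTER-IP, ...); `cluster_ip_of` is a hypothetical helper name.

```shell
#!/bin/sh
# cluster_ip_of (hypothetical helper): reads `kubectl get svc` output on
# stdin (including the header line) and prints the CLUSTER-IP column of the
# service whose NAME equals $1.
cluster_ip_of() {
    awk -v svc="$1" 'NR > 1 && $1 == svc { print $3 }'
}

# Sample output copied from the listing above.
cluster_ip_of nginx <<'EOF'
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h
nginx ClusterIP 10.106.146.49 <none> 80/TCP 10s
EOF
# prints 10.106.146.49
```

On a live cluster, `kubectl get svc nginx -o jsonpath='{.spec.clusterIP}'` returns the same value without any text parsing. Note that a `ClusterIP` Service is only reachable from inside the cluster, which is why the curl test is run from a cluster node.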

[[email protected] ~]# curl 10.106.146.49

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

At this point, the highly available k8s cluster deployed with kubeadm is up and running.

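Note: the etcd cluster needs at least three masters. etcd stays available only while a majority of its members are up, so two members cannot provide high availability: three members tolerate the loss of one, and five tolerate the loss of two. Keep this in mind when sizing the cluster.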
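The numbers in the note follow from etcd's majority rule: a cluster of n members needs floor(n/2) + 1 of them alive, so it tolerates floor((n - 1)/2) failures. A small sketch of that arithmetic, with hypothetical helper names `quorum` and `tolerated_failures`:

```shell
#!/bin/sh
# quorum (hypothetical helper): smallest majority of $1 members.
quorum() {
    echo $(( $1 / 2 + 1 ))
}

# tolerated_failures (hypothetical helper): how many of $1 members can be
# lost while a majority is still alive.
tolerated_failures() {
    echo $(( ($1 - 1) / 2 ))
}

for n in 1 2 3 4 5; do
    echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(tolerated_failures "$n")"
done
# members=1 quorum=1 tolerated_failures=0
# members=2 quorum=2 tolerated_failures=0
# members=3 quorum=2 tolerated_failures=1
# members=4 quorum=3 tolerated_failures=1
# members=5 quorum=3 tolerated_failures=2
```

The table also shows why even member counts are rarely used: going from three to four members raises the quorum without allowing any additional failure.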
