
Environment:

# Operating system: CentOS 7

# Docker version: 19.03.5

# Rancher version: latest

# Rancher server node IP: 192.168.2.175

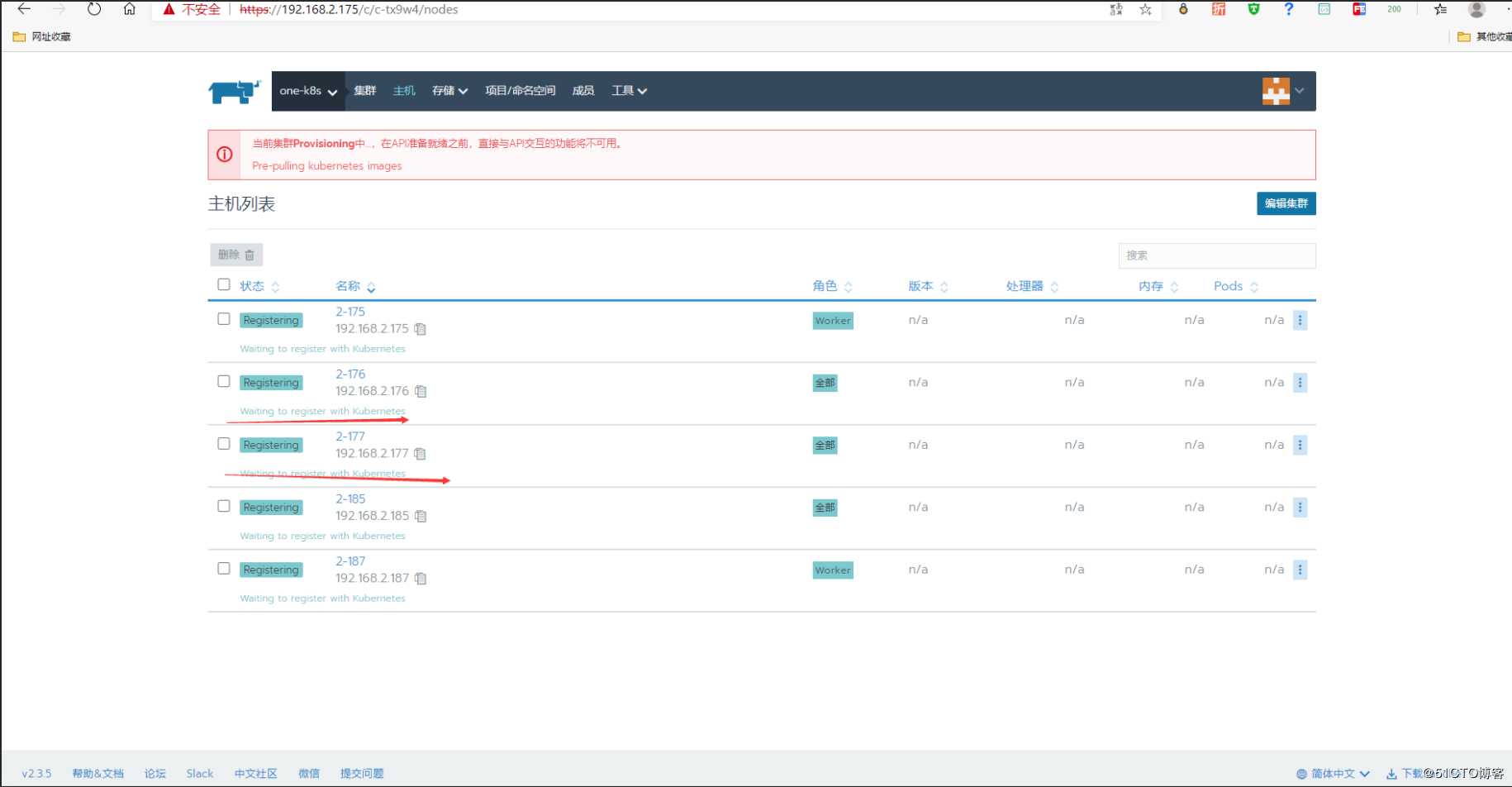

# Rancher agent node IPs: 192.168.2.175,192.168.2.176,192.168.2.177,192.168.2.185,192.168.2.187

# K8S master node IPs: 192.168.2.176,192.168.2.177,192.168.2.185

# K8S worker node IPs: 192.168.2.175,192.168.2.176,192.168.2.177,192.168.2.185,192.168.2.187

# K8S etcd node IPs: 192.168.2.176,192.168.2.177,192.168.2.185

Deployment preparation:

# Run these steps on all nodes

# Modify kernel parameters:

Disable swap

vim /etc/sysctl.conf

vm.swappiness=0

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

sysctl -p

Apply immediately (lasts until the next reboot)

swapoff -a && sysctl -w vm.swappiness=0
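The kernel settings above can also be kept in a separate drop-in file instead of being edited into /etc/sysctl.conf by hand. The sketch below shows one way to do that. The function name and the target file name are assumptions for illustration, not part of the original setup. It also assumes that the br_netfilter module has to be loaded before the net.bridge.* keys are accepted, which is the usual situation on CentOS 7.

```shell
#!/usr/bin/env bash
# Write the kernel parameters from this section to a sysctl drop-in file.
# The target path is a parameter so the function can be tried on a scratch file.
write_k8s_sysctl() {
  local target="$1"
  cat > "$target" <<'EOF'
vm.swappiness = 0
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Demo against a temporary file. On a real node the target would be something
# like /etc/sysctl.d/99-k8s.conf (hypothetical file name), followed by:
#   modprobe br_netfilter   # assumption: needed so the net.bridge.* keys exist
#   sysctl --system
conf="$(mktemp)"
write_k8s_sysctl "$conf"
cat "$conf"
```

Keeping the settings in their own file makes them easy to remove or copy to other nodes without touching the stock sysctl.conf.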

# Edit fstab so that swap is no longer mounted at boot (comment out the swap line)

vi /etc/fstab

# /dev/mapper/centos-swap swap swap defaults 0 0
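Commenting out the swap entry by hand on every node is easy to get wrong, so the edit can be scripted. The following is a minimal sketch, assuming the standard fstab layout where the third field is the filesystem type. The function name is hypothetical, and the demo runs on a temporary sample file rather than on /etc/fstab.

```shell
#!/usr/bin/env bash
# Comment out every active swap entry in an fstab-style file.
# A backup is written next to the file as <file>.bak before it is changed.
disable_swap_in_fstab() {
  local file="$1"
  cp "$file" "$file.bak" &&
    sed -E 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/# \1/' \
      "$file.bak" > "$file"
}

# Dry run on sample content (not a real fstab).
sample="$(mktemp)"
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$sample"
disable_swap_in_fstab "$sample"
cat "$sample"
# On a real node, after checking the result on a copy:
#   disable_swap_in_fstab /etc/fstab
```

Lines that are already commented out are left alone, so running the function twice does not add a second "#".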

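# Add the Docker CE yum repository (yum-config-manager is provided by the yum-utils package; install yum-utils first if the command is not found)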

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Add the Docker configuration

mkdir -p /etc/docker

vim /etc/docker/daemon.json

{
  "max-concurrent-downloads": 20,
  "data-root": "/apps/docker/data",
  "exec-root": "/apps/docker/root",
  "registry-mirrors": ["https://docker.mirrors.ustc.edu.cn"],
  "log-driver": "json-file",
  "bridge": "docker0",
  "oom-score-adjust": -1000,
  "debug": false,
  "log-opts": {
    "max-size": "100M",
    "max-file": "10"
  },
  "default-ulimits": {
    "nofile": {
      "Name": "nofile",
      "Hard": 1024000,
      "Soft": 1024000
    },
    "nproc": {
      "Name": "nproc",
      "Hard": 1024000,
      "Soft": 1024000
    },
    "core": {
      "Name": "core",
      "Hard": -1,
      "Soft": -1
    }
  }
}

# Install dependencies

yum install -y yum-utils ipvsadm telnet wget net-tools conntrack ipset jq iptables curl sysstat libseccomp socat nfs-utils fuse fuse-devel

# Install Docker dependencies

yum install -y python-pip python-devel yum-utils device-mapper-persistent-data lvm2

# Install Docker

yum install -y docker-ce

# Reload the systemd unit configuration

systemctl daemon-reload
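Before restarting Docker it can help to confirm that /etc/docker/daemon.json still parses as JSON, since a missing brace or comma is enough to stop the daemon from starting. The check below is only a sketch: the function name is hypothetical, and it assumes that at least one of python3, python, or jq is installed (jq is in the dependency list above).

```shell
#!/usr/bin/env bash
# Return 0 if the file parses as JSON, 1 if it does not,
# and 2 if no suitable parser (python3, python, jq) is installed.
validate_json() {
  local file="$1"
  if command -v python3 >/dev/null 2>&1; then
    python3 -m json.tool "$file" >/dev/null 2>&1 || return 1
  elif command -v python >/dev/null 2>&1; then
    python -m json.tool "$file" >/dev/null 2>&1 || return 1
  elif command -v jq >/dev/null 2>&1; then
    jq . "$file" >/dev/null 2>&1 || return 1
  else
    return 2
  fi
  return 0
}

# Demo on two scratch files: one well-formed, one missing its braces.
good="$(mktemp)"
bad="$(mktemp)"
printf '%s\n' '{"log-driver": "json-file", "log-opts": {"max-size": "100M"}}' > "$good"
printf '%s\n' '"log-driver": "json-file",' > "$bad"
validate_json "$good" && echo "good file: valid JSON"
validate_json "$bad" || echo "bad file: rejected"
# On a real node:
#   validate_json /etc/docker/daemon.json && systemctl restart docker
```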

# Restart Docker

systemctl restart docker

# Enable Docker at boot

systemctl enable docker

Deploy Rancher server

# Run on: 192.168.2.175

docker run -d --restart=unless-stopped -p 80:80 -p 443:443 rancher/rancher:latest

# Wait for the image to be pulled and the container to start

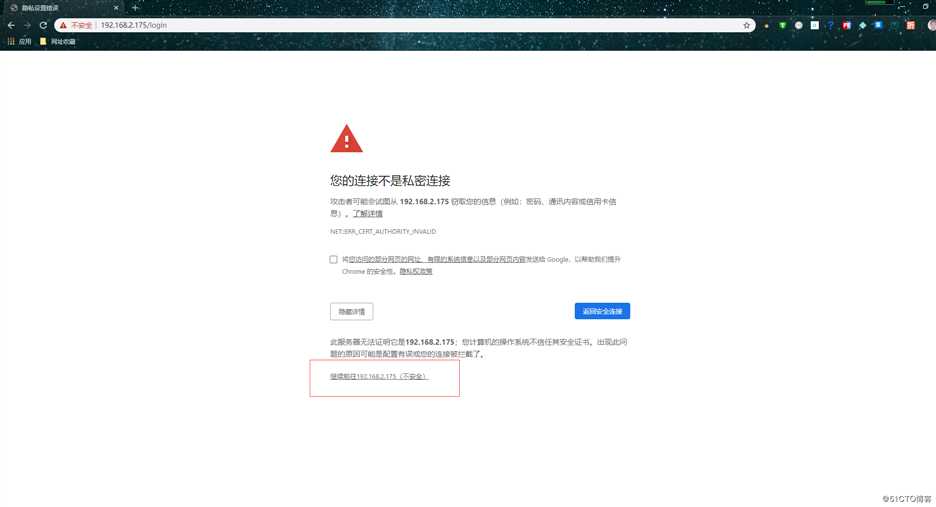

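Open a browser and go to 192.168.2.175. The browser redirects to HTTPS automatically. Because the certificate is self-signed, choose "Proceed to 192.168.2.175 (unsafe)".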

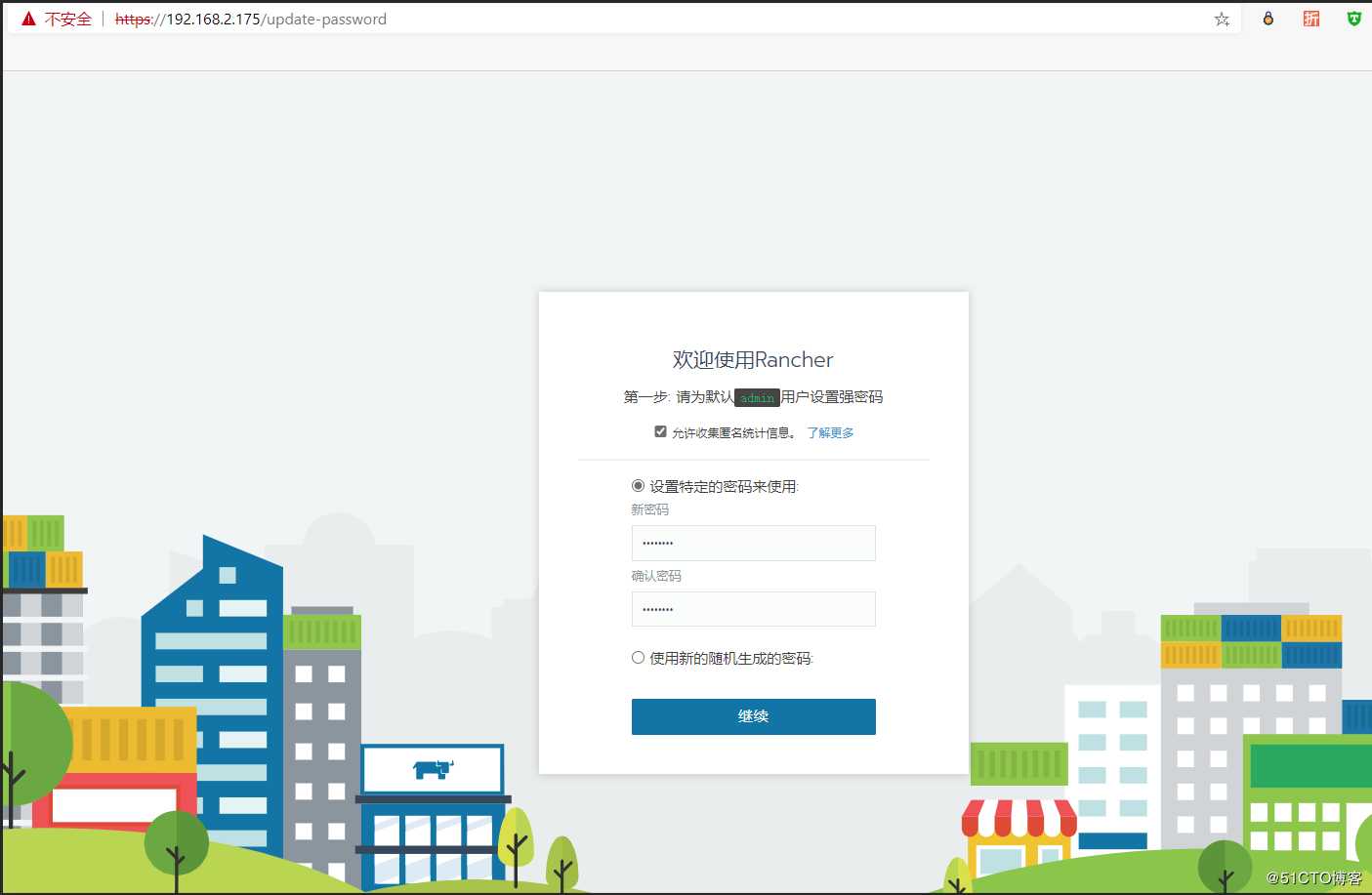

Configure Rancher server

Set a password and click Continue

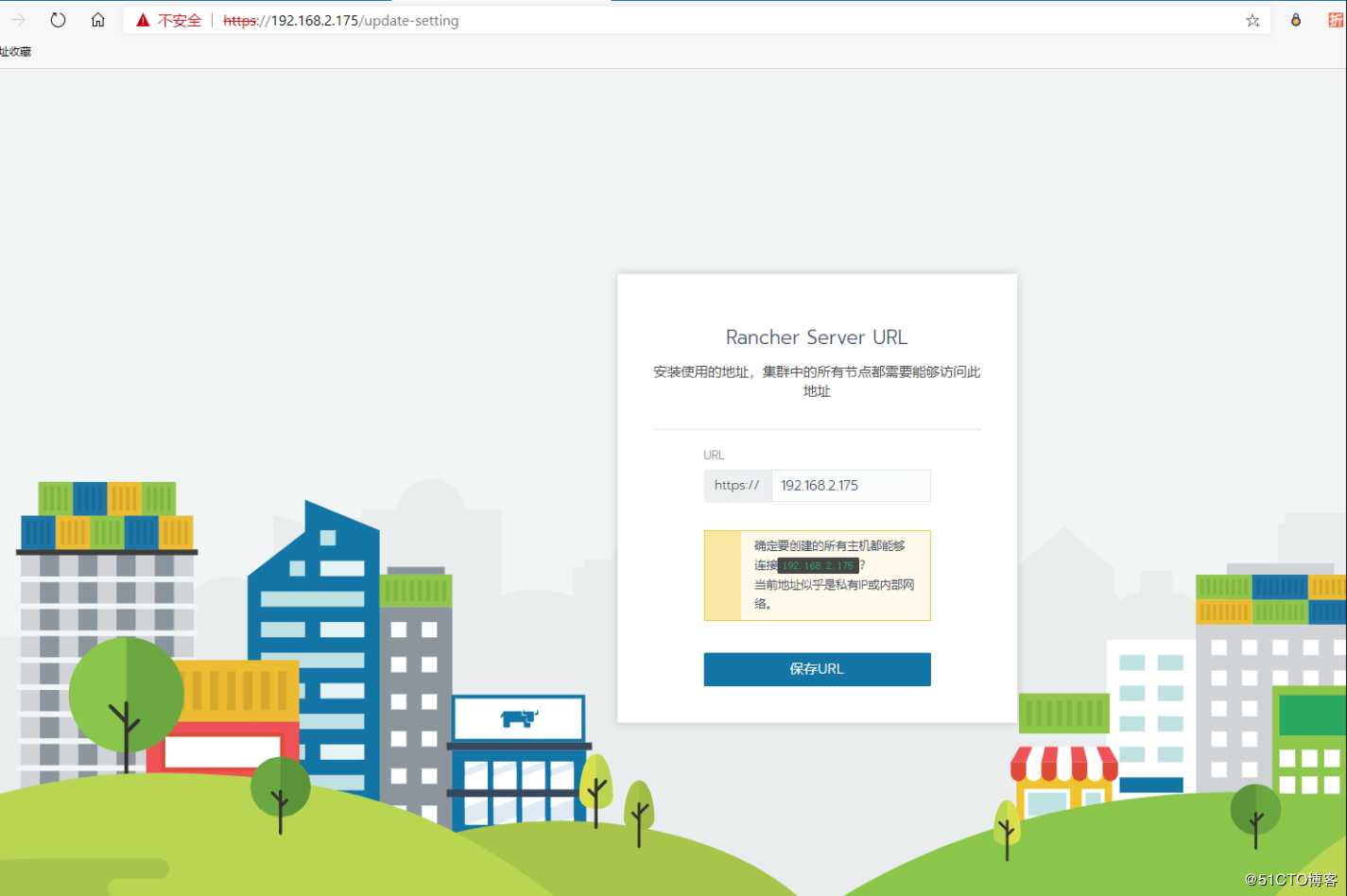

Click Save URL

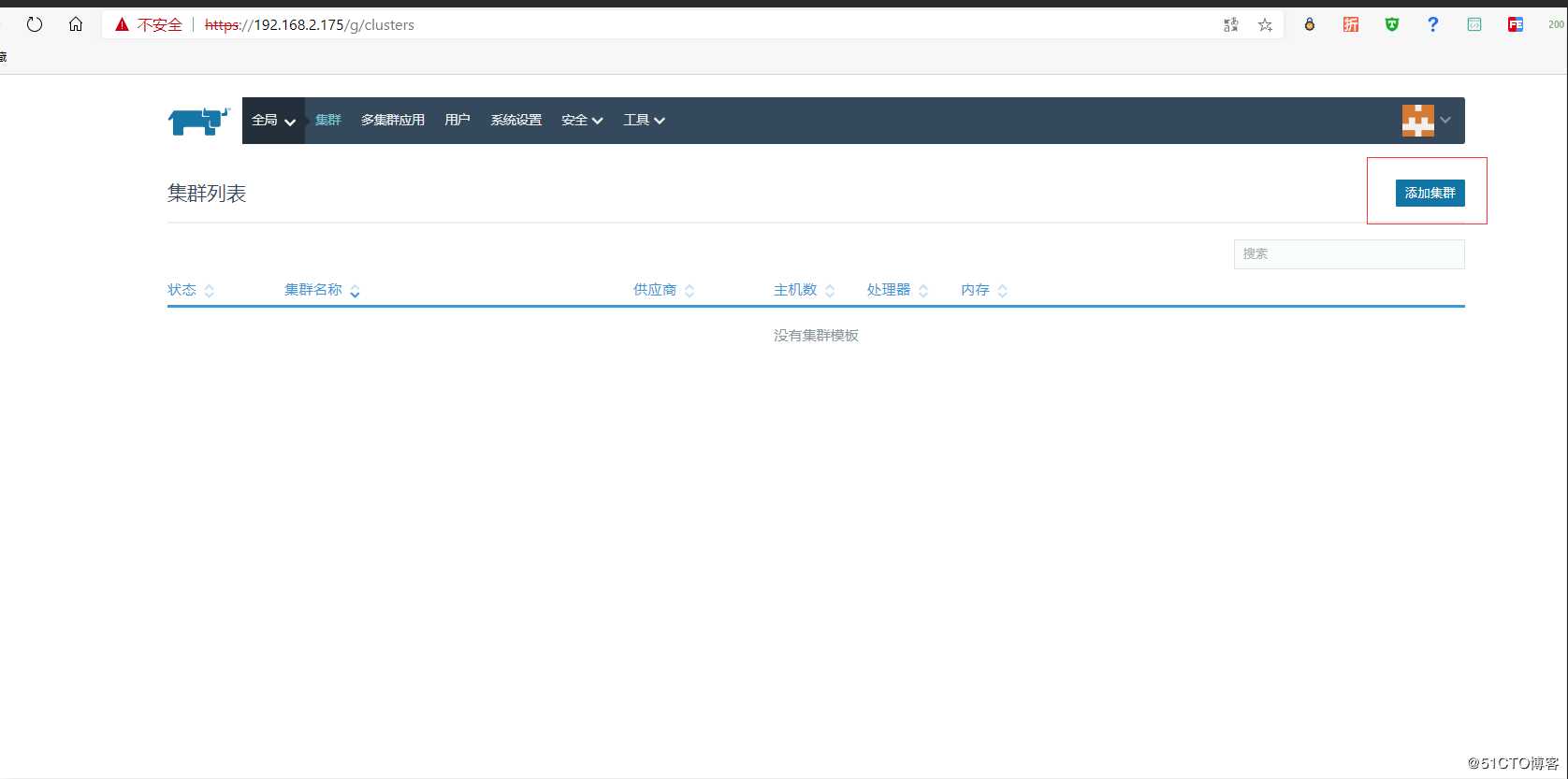

Deploy the K8S cluster

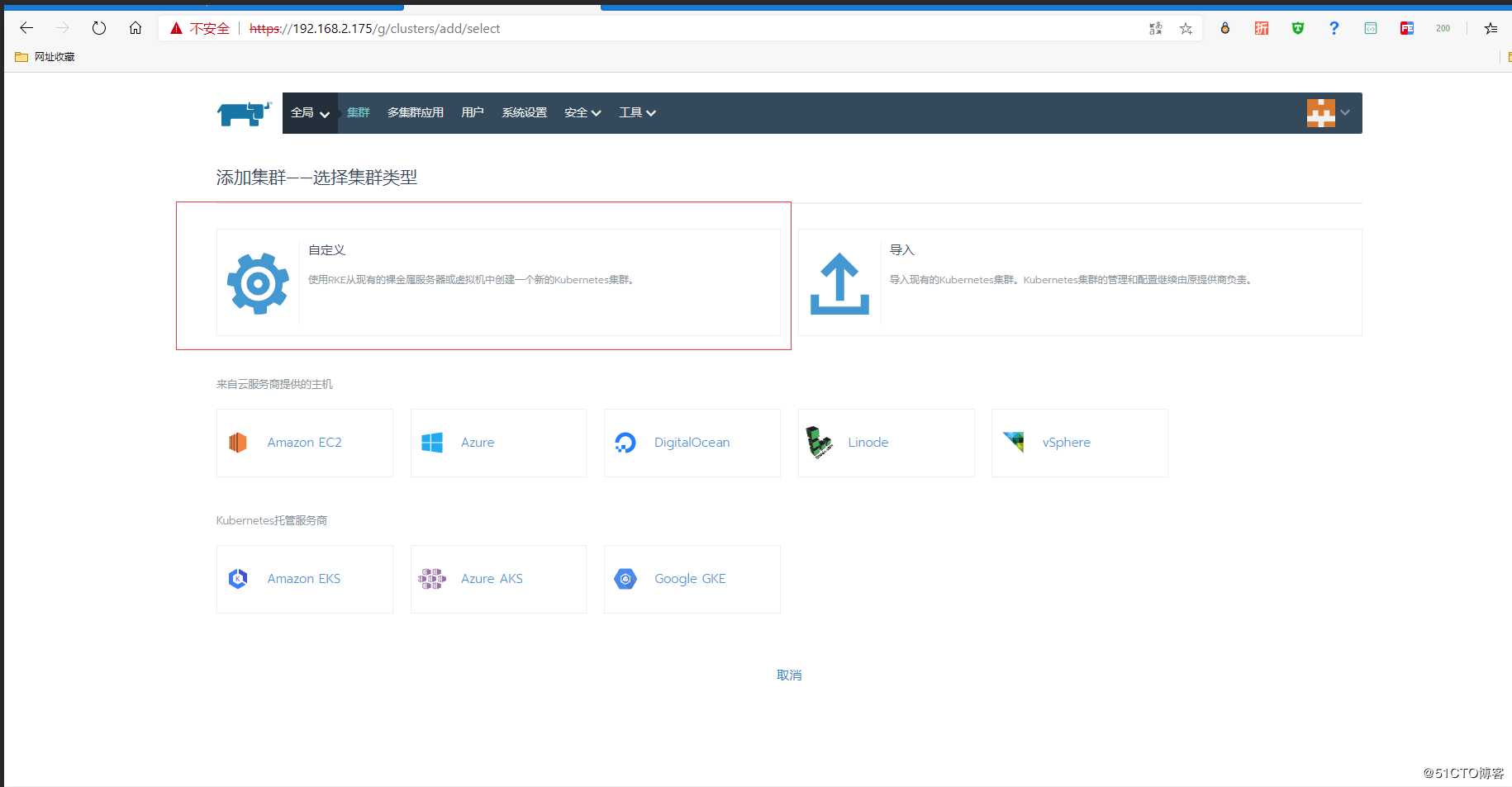

Click Global, then choose Add Cluster

Choose Custom

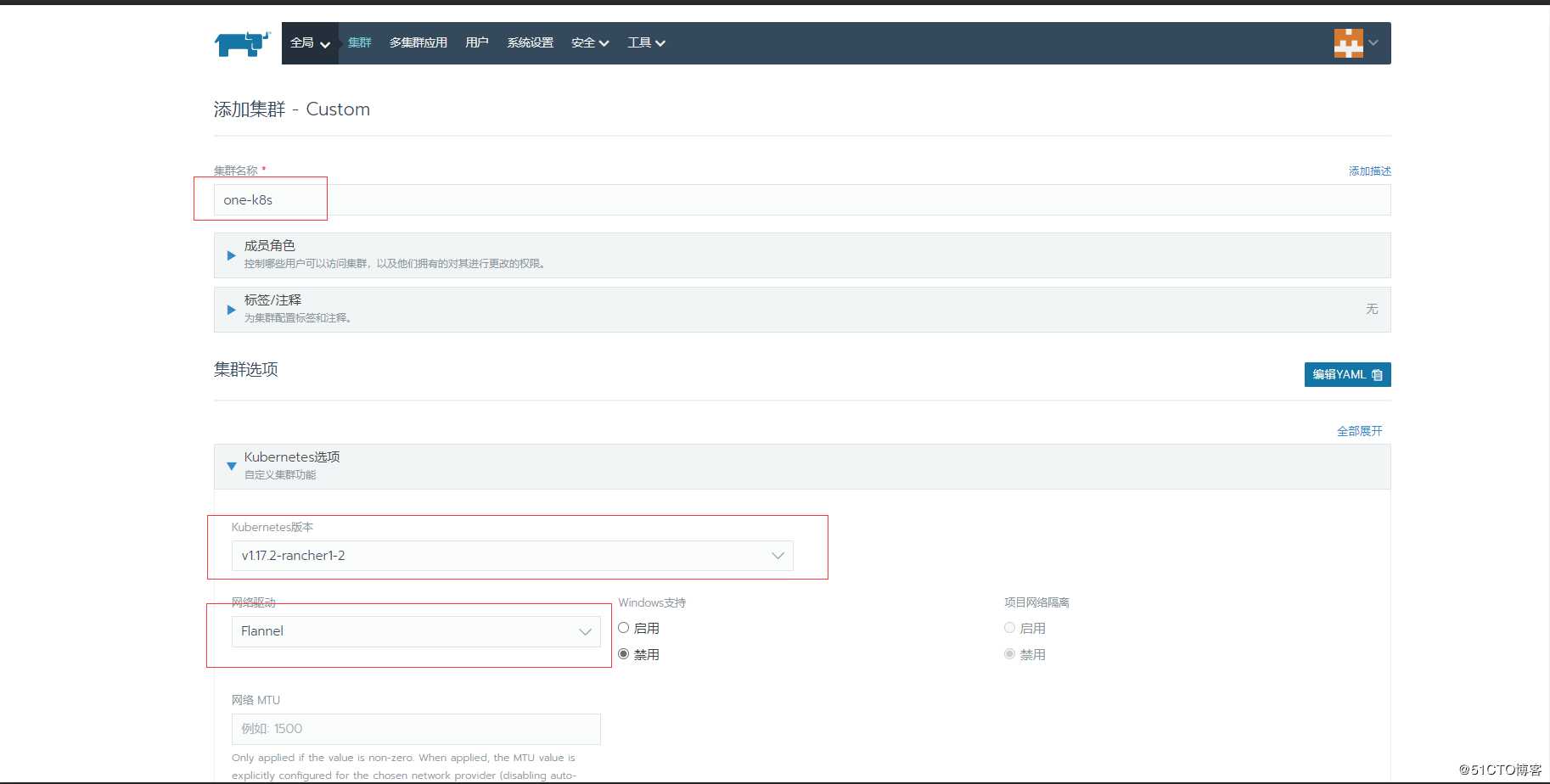

Set the cluster name and cluster parameters to suit your needs

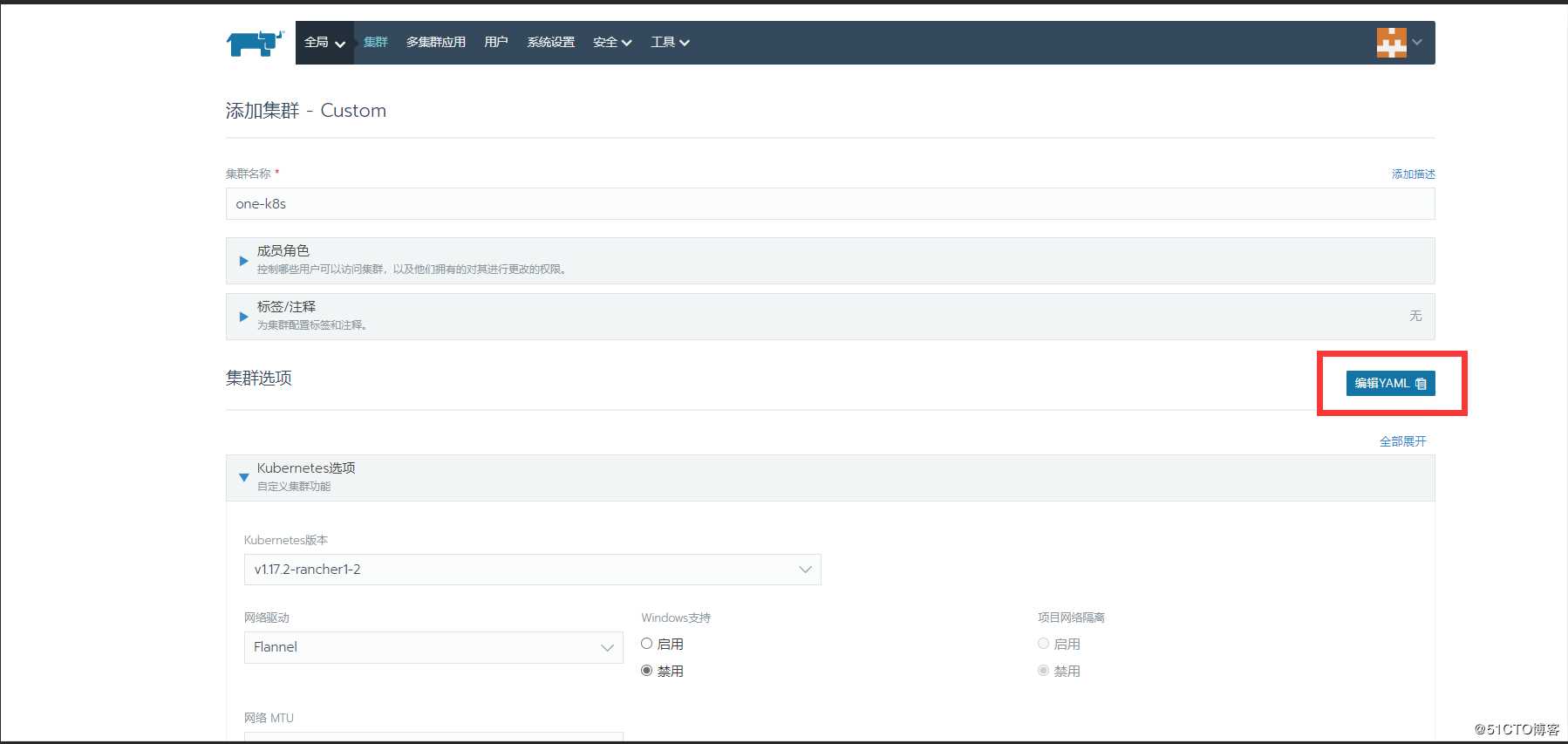

Choose Edit as YAML to configure additional cluster parameters

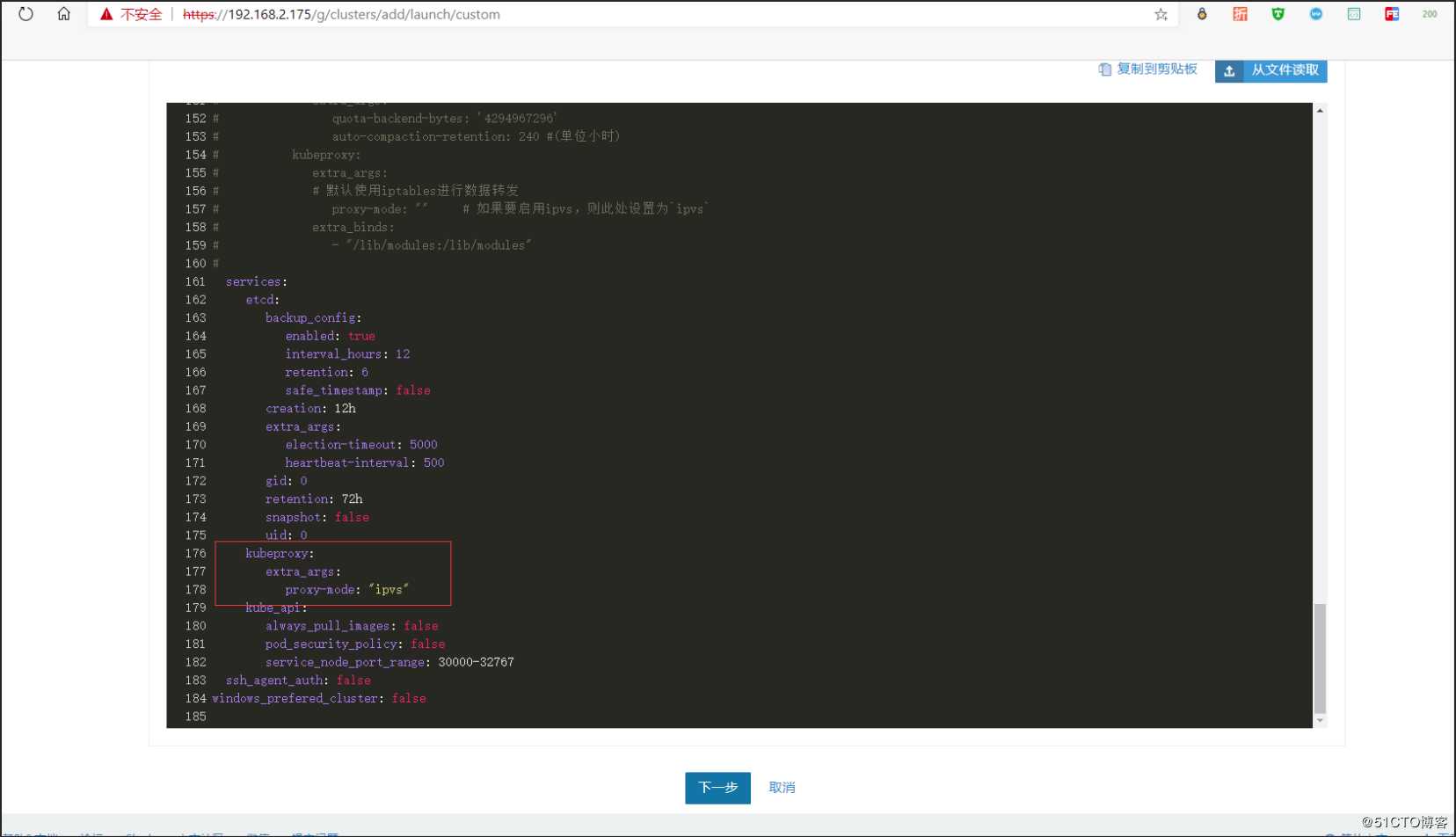

Configure the kube-proxy forwarding mode. Here I changed it to IPVS; the default is iptables. When the configuration is done, choose Next.
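IPVS mode depends on the IPVS kernel modules being available on every node; if they cannot be loaded, kube-proxy typically falls back to iptables. The sketch below prepares a module list for that. It is an assumption-laden example, not a step from the original setup: the function name and the file name are hypothetical, and the module list assumes the 3.10 kernel shown later in this post (newer kernels use nf_conntrack instead of nf_conntrack_ipv4).

```shell
#!/usr/bin/env bash
# Kernel modules commonly needed by kube-proxy in IPVS mode on a 3.10 kernel.
IPVS_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"

# Write one module name per line, the format read by systemd-modules-load.
write_ipvs_modules_file() {
  local target="$1"
  : > "$target"
  for mod in $IPVS_MODULES; do
    echo "$mod" >> "$target"
  done
}

# Demo against a temporary file.
list="$(mktemp)"
write_ipvs_modules_file "$list"
cat "$list"
# On a real node: write to /etc/modules-load.d/ipvs.conf (hypothetical file name)
# and load the modules right away with:
#   for m in $IPVS_MODULES; do modprobe "$m"; done
```

After the cluster is up, `ipvsadm -Ln` (ipvsadm is in the dependency list) shows whether virtual servers are actually being programmed.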

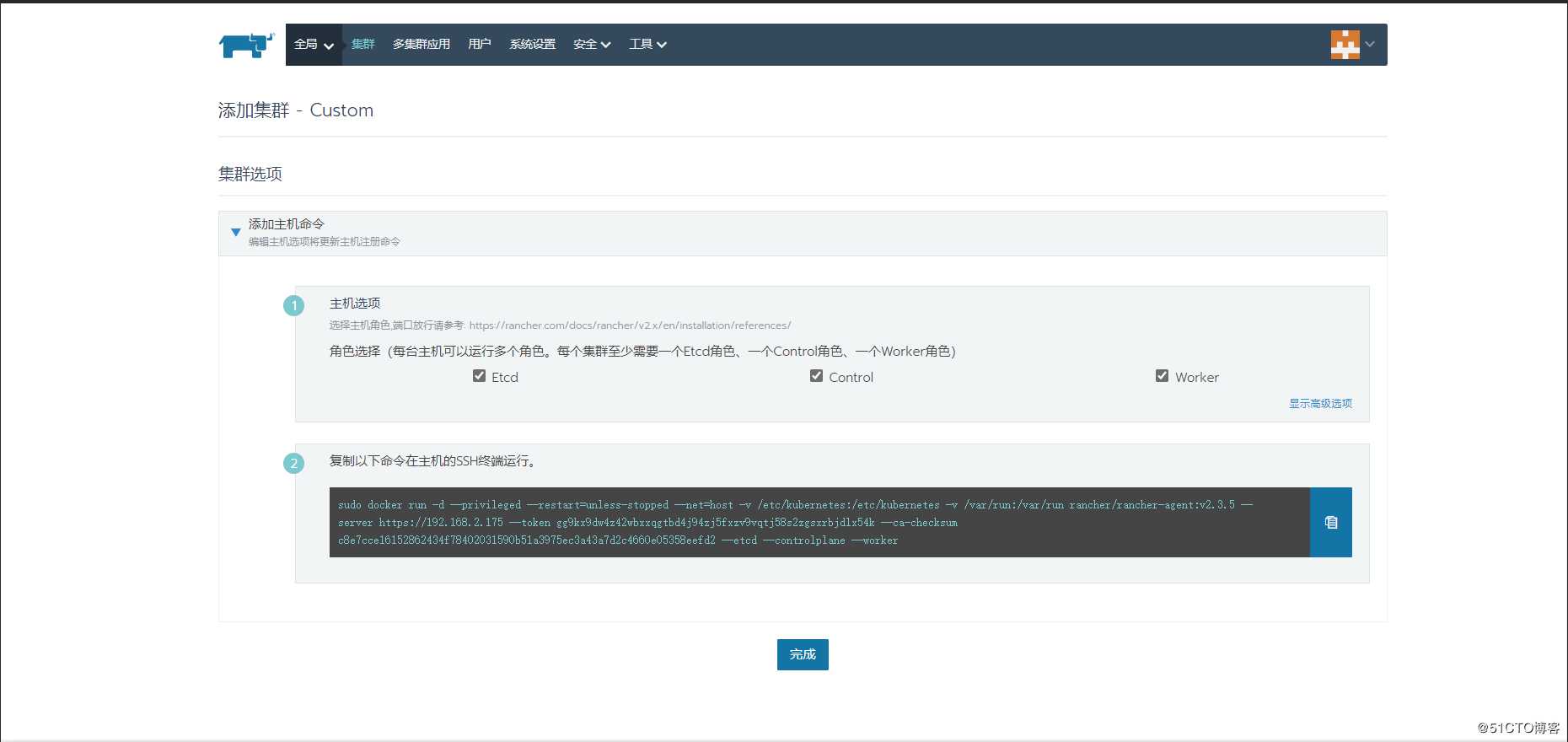

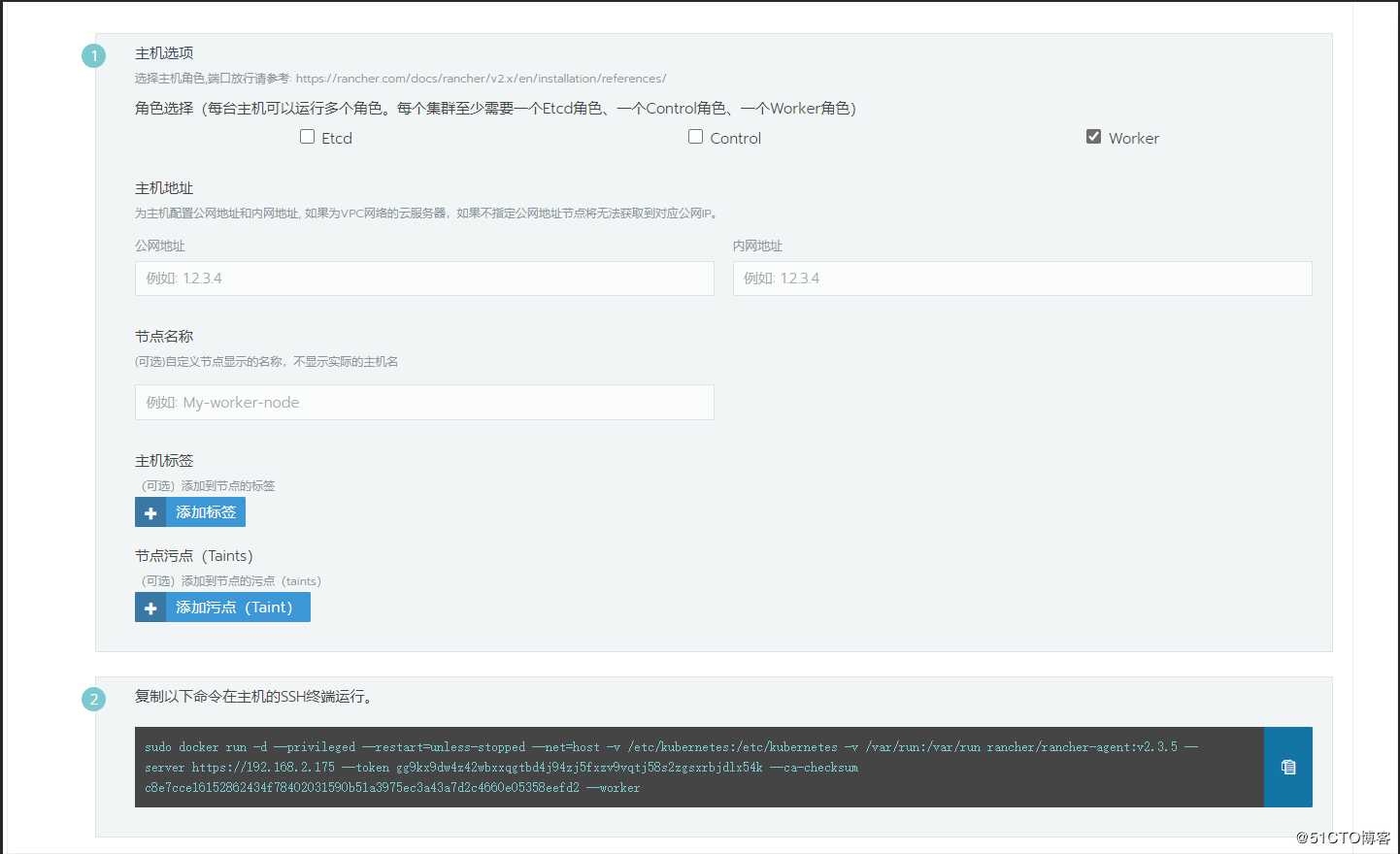

Start the Rancher agent

# Run on nodes 192.168.2.176, 192.168.2.177 and 192.168.2.185 (etcd, controlplane and worker roles)

docker run -d --privileged --restart=unless-stopped --net=host -v /etc/kubernetes:/etc/kubernetes -v /var/run:/var/run rancher/rancher-agent:v2.3.5 --server https://192.168.2.175 --token 8ps9xfssqz6vxbd6ltclztfvfk2k7794vnj42gpg94kjd82782jjlv --ca-checksum c8e7cce16152862434f78402031590b51a3975ec3a43a7d2c4660e05358eefd2 --etcd --controlplane --worker

# Run on 192.168.2.175 and 192.168.2.187 (worker role only)

docker run -d --privileged --restart=unless-stopped --net=host -v /etc/kubernetes:/etc/kubernetes -v /var/run:/var/run rancher/rancher-agent:v2.3.5 --server https://192.168.2.175 --token 8ps9xfssqz6vxbd6ltclztfvfk2k7794vnj42gpg94kjd82782jjlv --ca-checksum c8e7cce16152862434f78402031590b51a3975ec3a43a7d2c4660e05358eefd2 --worker
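The two commands above differ only in their role flags, so the mapping from node to command can be written down once. The following sketch prints the command for each node instead of running it. The function names are hypothetical, and the token and checksum are placeholders: use the values that the Rancher UI generates for your own cluster.

```shell
#!/usr/bin/env bash
SERVER_URL="https://192.168.2.175"
AGENT_IMAGE="rancher/rancher-agent:v2.3.5"
TOKEN="<token-from-rancher-ui>"              # placeholder, not a real token
CA_CHECKSUM="<ca-checksum-from-rancher-ui>"  # placeholder, not a real checksum

# Map a node IP to the role flags used in this post.
role_flags() {
  case "$1" in
    192.168.2.176|192.168.2.177|192.168.2.185) echo "--etcd --controlplane --worker" ;;
    *) echo "--worker" ;;
  esac
}

# Print (do not execute) the agent command for one node.
agent_command() {
  echo "docker run -d --privileged --restart=unless-stopped --net=host" \
    "-v /etc/kubernetes:/etc/kubernetes -v /var/run:/var/run $AGENT_IMAGE" \
    "--server $SERVER_URL --token $TOKEN --ca-checksum $CA_CHECKSUM $(role_flags "$1")"
}

for ip in 192.168.2.175 192.168.2.176 192.168.2.177 192.168.2.185 192.168.2.187; do
  echo "# $ip"
  agent_command "$ip"
done
```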

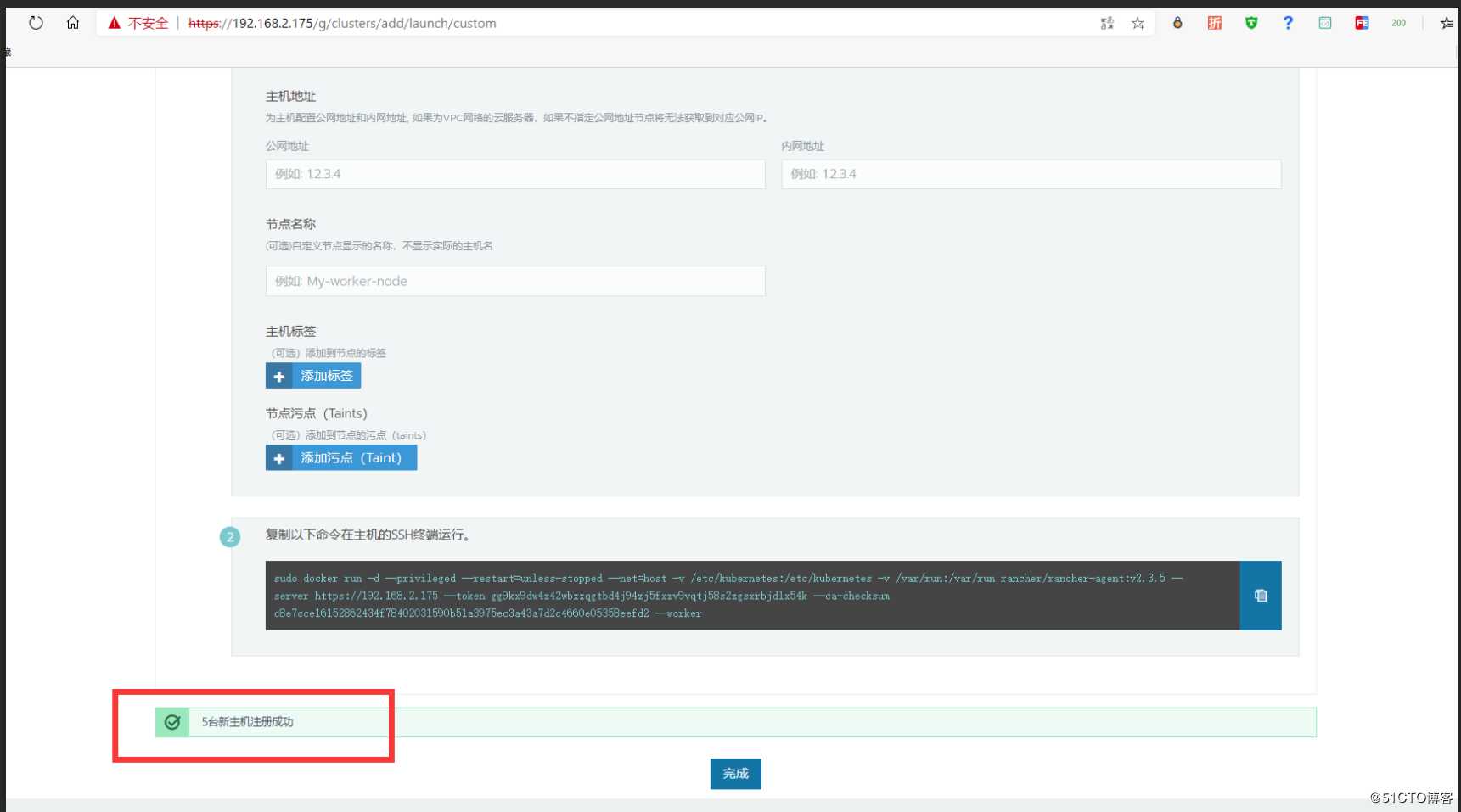

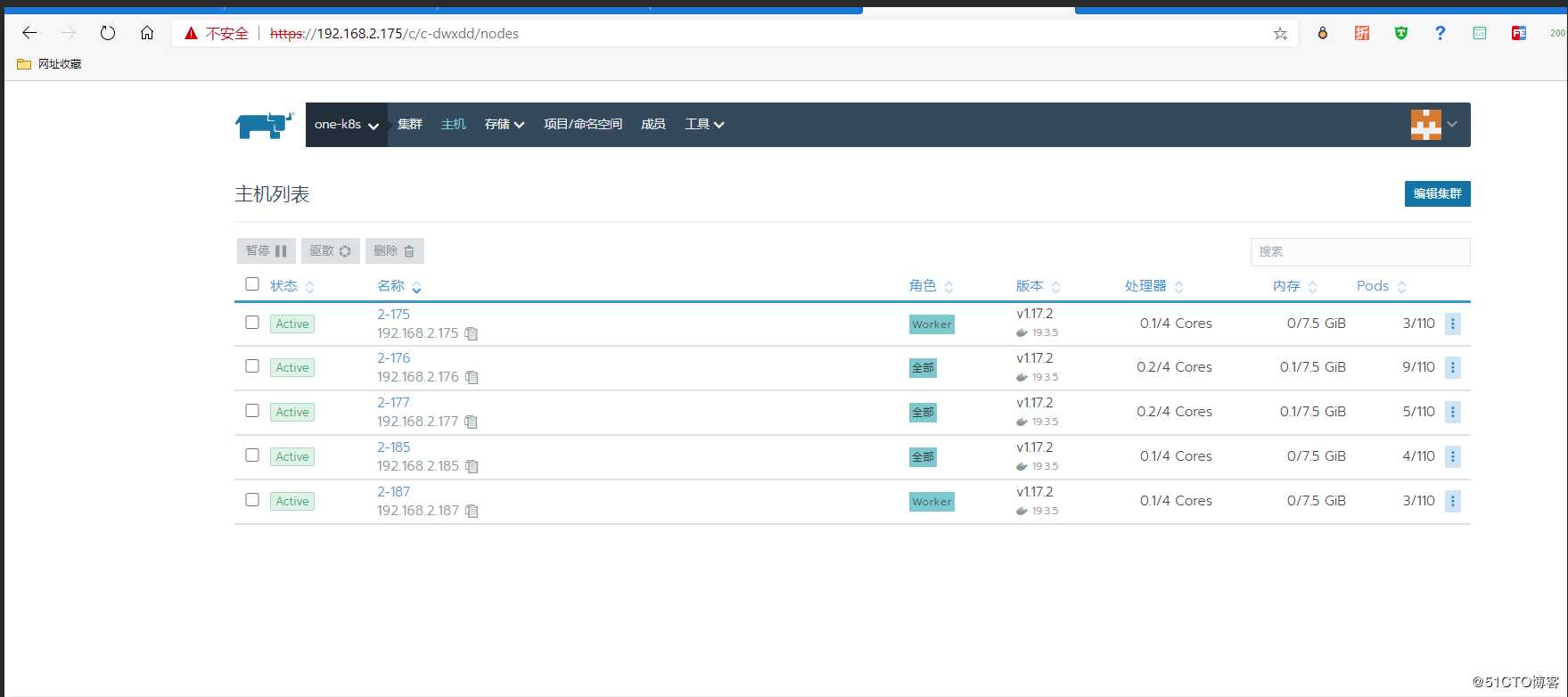

After all nodes have been added, click the Done button

Wait for the cluster deployment to finish

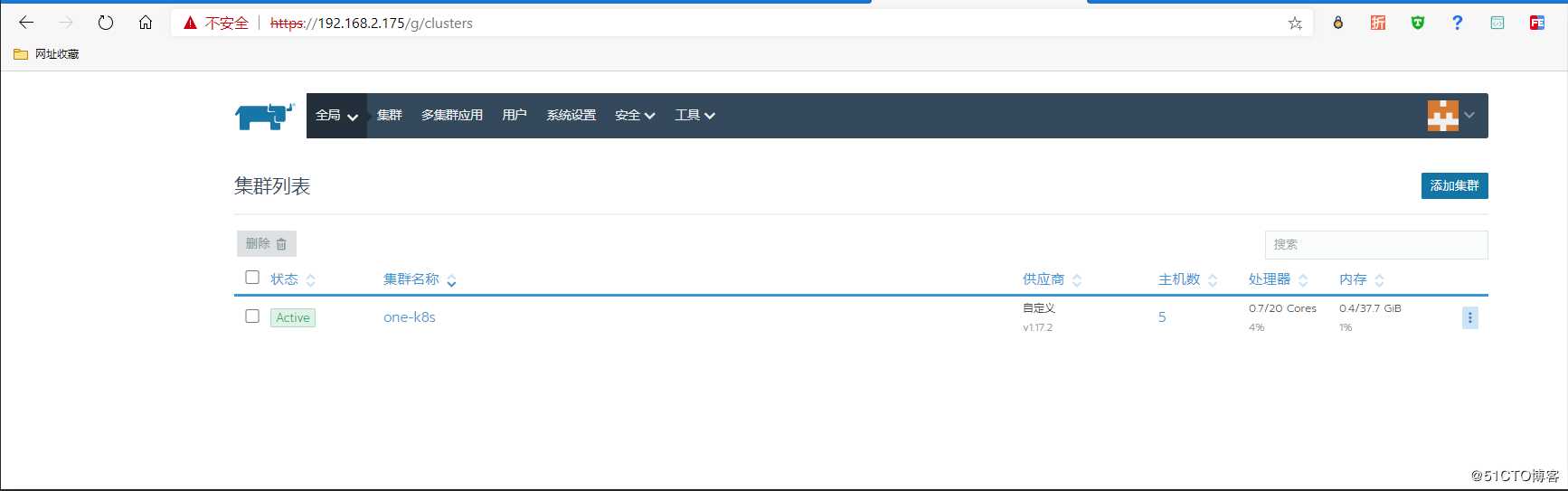

The cluster is now healthy

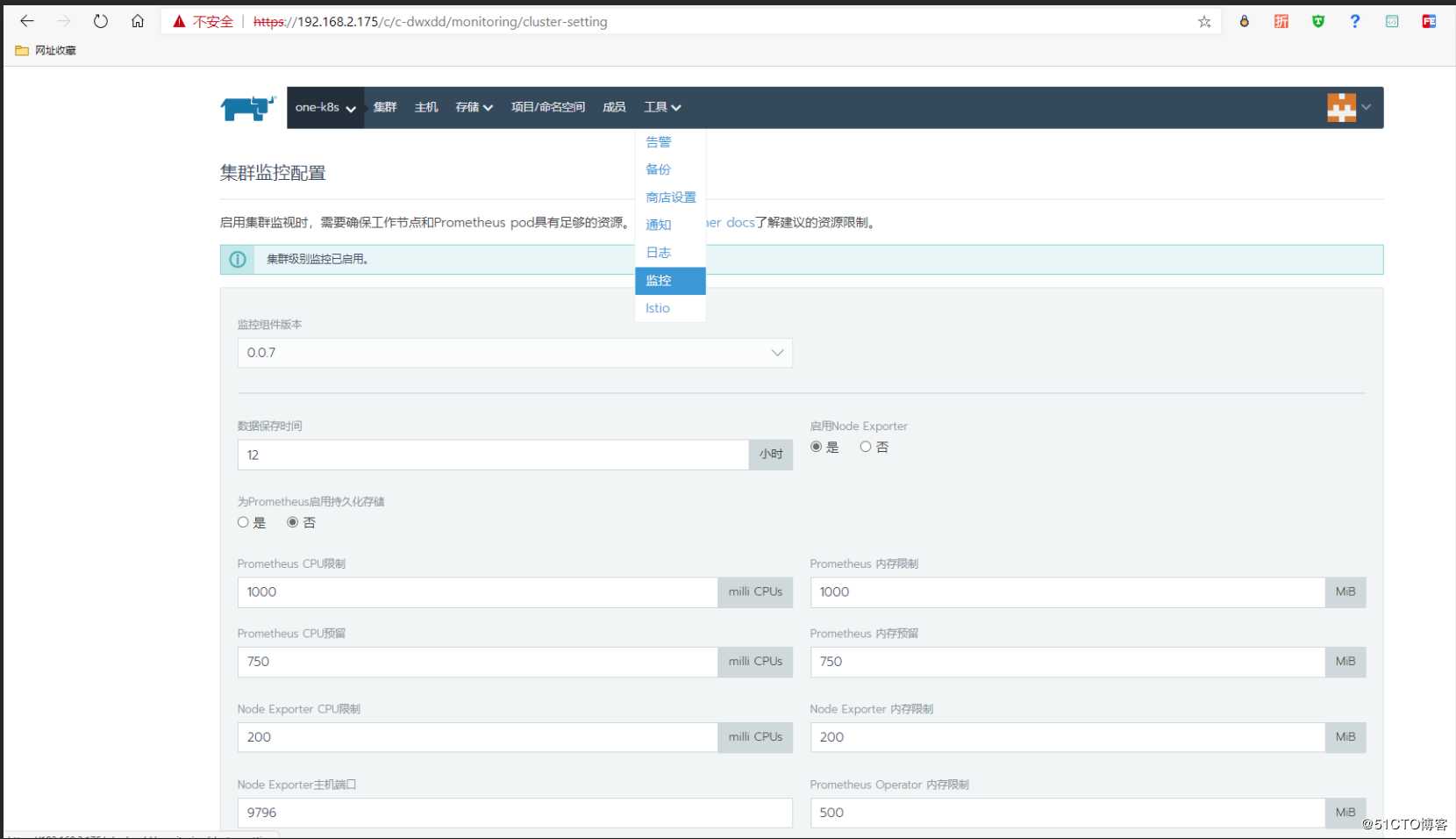

Deploy monitoring

Before monitoring is deployed

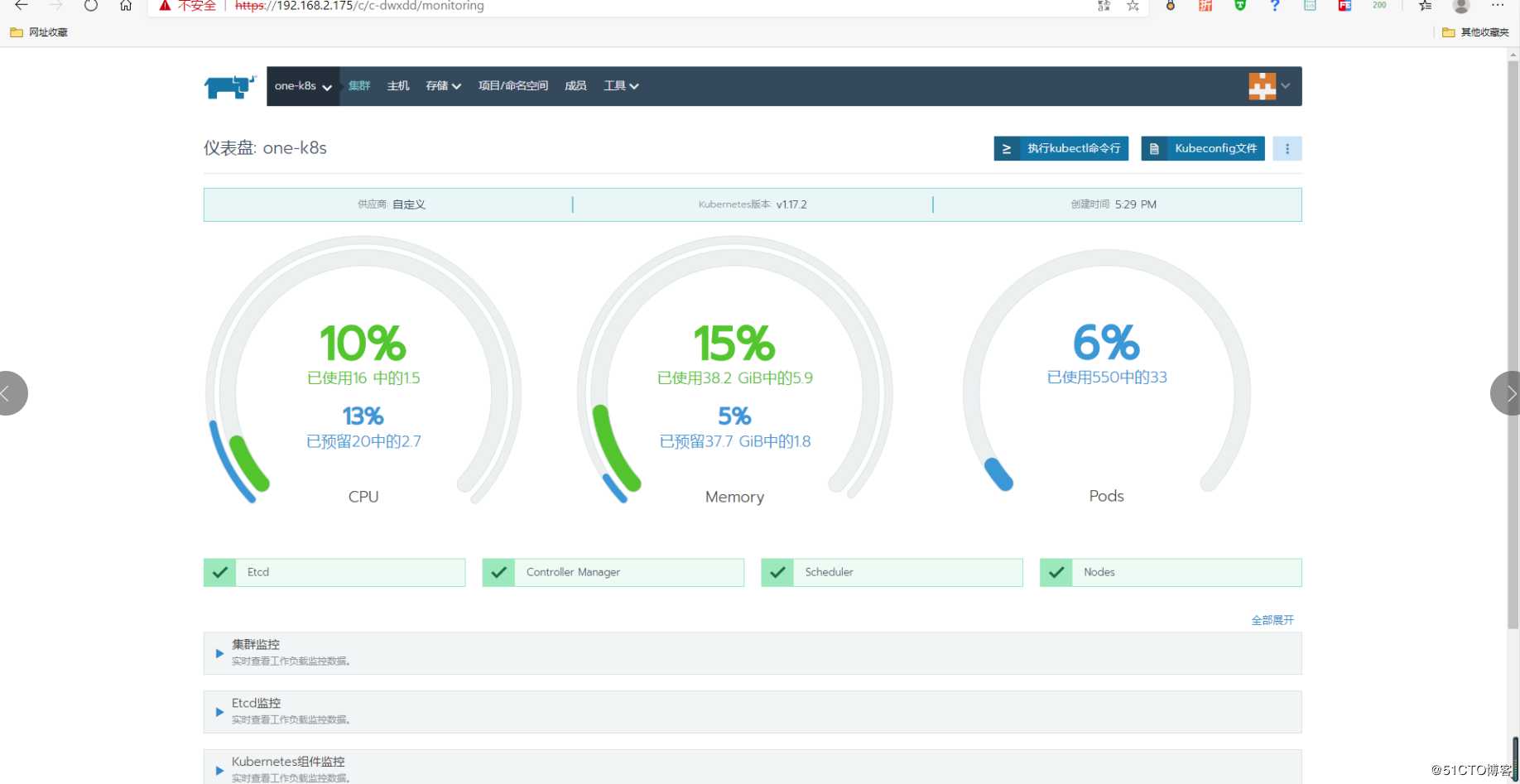

Monitoring deployment complete

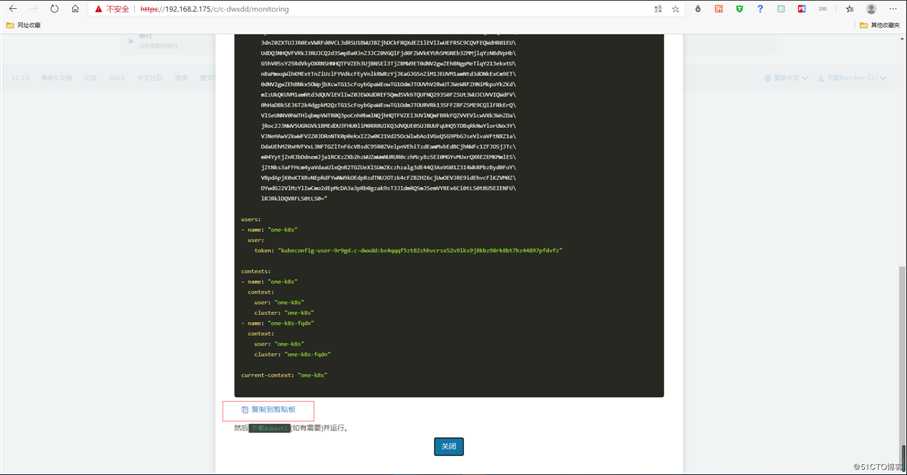

Configure remote access to the K8S cluster

Choose Kubeconfig File and click to open it

Choose Copy to Clipboard, then choose Close

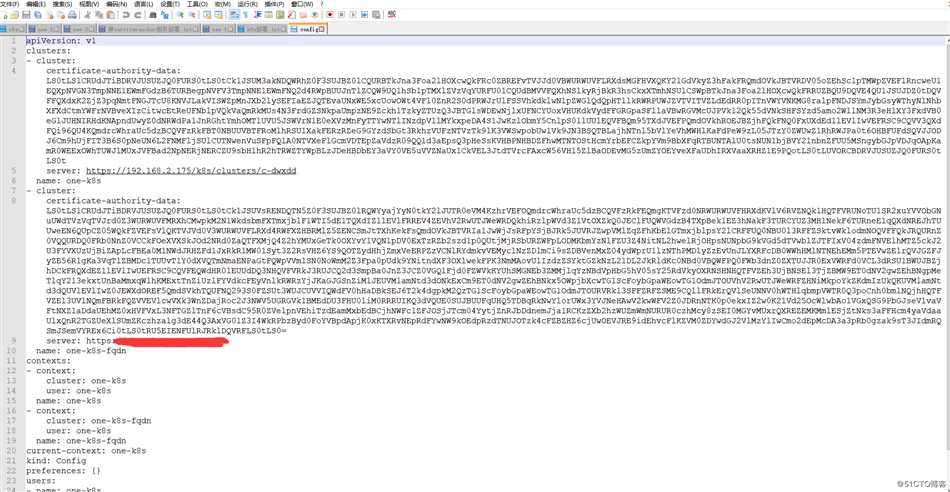

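Open a text editor (I use Notepad++)

Save the content as a file named config

Using the config file with kubectl

# Linux subsystem on Windows 10 (WSL)

# Adjust the path to wherever you saved the config file. You can also put it at the default path ~/.kube/config; kubectl reads that path by default, so --kubeconfig does not need to be specified.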

export kubeconfig=/mnt/g/work/rke/config
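Note that the lower-case `kubeconfig` variable above is an ordinary shell variable that only works because it is passed to `--kubeconfig` explicitly. kubectl itself reads the upper-case `KUBECONFIG` environment variable, so exporting that instead removes the need for the flag. A small sketch, using the same path as above:

```shell
#!/usr/bin/env bash
# kubectl picks up the upper-case KUBECONFIG variable on its own.
export KUBECONFIG=/mnt/g/work/rke/config
echo "kubectl will read: $KUBECONFIG"

# Only call kubectl if it is installed and the file exists on this machine.
if command -v kubectl >/dev/null 2>&1 && [ -f "$KUBECONFIG" ]; then
  kubectl get node -o wide
fi
```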

# List all pods

kubectl --kubeconfig=$kubeconfig get pod -A -o wide

root@Qist:~# kubectl --kubeconfig=$kubeconfig get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

cattle-prometheus exporter-kube-state-cluster-monitoring-6db64f7d4b-hf6gp 1/1 Running 0 125m 10.42.3.4 2-187 <none> <none>

cattle-prometheus exporter-node-cluster-monitoring-b9q4k 1/1 Running 0 125m 192.168.2.175 2-175 <none> <none>

cattle-prometheus exporter-node-cluster-monitoring-d7v9w 1/1 Running 0 125m 192.168.2.187 2-187 <none> <none>

cattle-prometheus exporter-node-cluster-monitoring-f686h 1/1 Running 0 125m 192.168.2.177 2-177 <none> <none>

cattle-prometheus exporter-node-cluster-monitoring-t9hbx 1/1 Running 0 125m 192.168.2.176 2-176 <none> <none>

cattle-prometheus exporter-node-cluster-monitoring-tw9n7 1/1 Running 0 125m 192.168.2.185 2-185 <none> <none>

cattle-prometheus grafana-cluster-monitoring-65cbc8749-xddfd 2/2 Running 0 125m 10.42.3.3 2-187 <none> <none>

cattle-prometheus prometheus-cluster-monitoring-0 5/5 Running 1 125m 10.42.2.2 2-175 <none> <none>

cattle-prometheus prometheus-operator-monitoring-operator-5b66559965-4lkvv 1/1 Running 0 125m 10.42.3.2 2-187 <none> <none>

cattle-system cattle-cluster-agent-748d9f6bf6-zgk4x 1/1 Running 0 132m 10.42.0.6 2-176 <none> <none>

cattle-system cattle-node-agent-f2szj 1/1 Running 0 128m 192.168.2.185 2-185 <none> <none>

cattle-system cattle-node-agent-h2hgv 1/1 Running 0 130m 192.168.2.177 2-177 <none> <none>

cattle-system cattle-node-agent-p2t72 1/1 Running 0 129m 192.168.2.187 2-187 <none> <none>

cattle-system cattle-node-agent-wnqg5 1/1 Running 0 132m 192.168.2.176 2-176 <none> <none>

cattle-system cattle-node-agent-zsvvt 1/1 Running 0 129m 192.168.2.175 2-175 <none> <none>

cattle-system kube-api-auth-ddnpp 1/1 Running 0 130m 192.168.2.177 2-177 <none> <none>

cattle-system kube-api-auth-h9mtw 1/1 Running 0 132m 192.168.2.176 2-176 <none> <none>

cattle-system kube-api-auth-wbz96 1/1 Running 0 127m 192.168.2.185 2-185 <none> <none>

ingress-nginx default-http-backend-67cf578fc4-tn4jk 1/1 Running 0 132m 10.42.0.5 2-176 <none> <none>

ingress-nginx nginx-ingress-controller-7dr8g 0/1 CrashLoopBackOff 30 129m 192.168.2.175 2-175 <none> <none>

ingress-nginx nginx-ingress-controller-gcwhl 1/1 Running 0 129m 192.168.2.187 2-187 <none> <none>

ingress-nginx nginx-ingress-controller-hxmpb 1/1 Running 0 130m 192.168.2.177 2-177 <none> <none>

ingress-nginx nginx-ingress-controller-msbw7 1/1 Running 0 127m 192.168.2.185 2-185 <none> <none>

ingress-nginx nginx-ingress-controller-nlhsl 1/1 Running 0 132m 192.168.2.176 2-176 <none> <none>

kube-system coredns-7c5566588d-4ng4f 1/1 Running 0 133m 10.42.0.2 2-176 <none> <none>

kube-system coredns-7c5566588d-q695x 1/1 Running 0 130m 10.42.1.2 2-177 <none> <none>

kube-system coredns-autoscaler-65bfc8d47d-gnns8 1/1 Running 0 133m 10.42.0.3 2-176 <none> <none>

kube-system kube-flannel-cz6kh 2/2 Running 1 130m 192.168.2.177 2-177 <none> <none>

kube-system kube-flannel-dtslx 2/2 Running 2 129m 192.168.2.187 2-187 <none> <none>

kube-system kube-flannel-j7lrd 2/2 Running 0 128m 192.168.2.185 2-185 <none> <none>

kube-system kube-flannel-jm6v8 2/2 Running 0 133m 192.168.2.176 2-176 <none> <none>

kube-system kube-flannel-n8f82 2/2 Running 2 129m 192.168.2.175 2-175 <none> <none>

kube-system metrics-server-6b55c64f86-rxjjt 1/1 Running 0 133m 10.42.0.4 2-176 <none> <none>

kube-system rke-coredns-addon-deploy-job-2c5l2 0/1 Completed 0 133m 192.168.2.176 2-176 <none> <none>

kube-system rke-ingress-controller-deploy-job-5scd5 0/1 Completed 0 132m 192.168.2.176 2-176 <none> <none>

kube-system rke-metrics-addon-deploy-job-7dkc6 0/1 Completed 0 133m 192.168.2.176 2-176 <none> <none>

kube-system rke-network-plugin-deploy-job-2qxj5 0/1 Completed 0 133m 192.168.2.176 2-176 <none> <none>
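In a listing this long the one failing pod is easy to miss. The filter below is a minimal sketch that prints only pods whose status is neither Running nor Completed; it assumes the column layout of `kubectl get pod -A`, where STATUS is the fourth column. The function name is hypothetical, and the sample rows are copied from the output above.

```shell
#!/usr/bin/env bash
# Print "namespace/name status" for pods that are neither Running nor Completed.
unhealthy_pods() {
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# Sample rows from the listing above. On a live cluster:
#   kubectl get pod -A | unhealthy_pods
unhealthy_pods <<'EOF'
NAMESPACE NAME READY STATUS RESTARTS AGE
ingress-nginx nginx-ingress-controller-7dr8g 0/1 CrashLoopBackOff 30 129m
ingress-nginx nginx-ingress-controller-gcwhl 1/1 Running 0 129m
kube-system rke-coredns-addon-deploy-job-2c5l2 0/1 Completed 0 133m
EOF
```

For the sample rows this prints the nginx-ingress-controller pod on node 2-175, which is the failure explained in the final notes.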

# Check the K8S cluster component status

root@Qist:~# kubectl --kubeconfig=$kubeconfig get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

# List all nodes

root@Qist:~# kubectl --kubeconfig=$kubeconfig get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

2-175 Ready worker 131m v1.17.2 192.168.2.175 <none> CentOS Linux 7 (Core) 3.10.0-1062.12.1.el7.x86_64 docker://19.3.5

2-176 Ready controlplane,etcd,worker 135m v1.17.2 192.168.2.176 <none> CentOS Linux 7 (Core) 3.10.0-1062.12.1.el7.x86_64 docker://19.3.5

2-177 Ready controlplane,etcd,worker 132m v1.17.2 192.168.2.177 <none> CentOS Linux 7 (Core) 3.10.0-1062.12.1.el7.x86_64 docker://19.3.5

2-185 Ready controlplane,etcd,worker 129m v1.17.2 192.168.2.185 <none> CentOS Linux 7 (Core) 3.10.0-1062.12.1.el7.x86_64 docker://19.3.5

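2-187 Ready worker 131m v1.17.2 192.168.2.187 <none> CentOS Linux 7 (Core) 3.10.0-1062.12.1.el7.x86_64 docker://19.3.5

Final notes:

# Because Rancher server on 192.168.2.175 occupies ports 80 and 443, nginx-ingress-controller cannot start on that node (this is the CrashLoopBackOff pod in the listing above). You can modify nginx-ingress-controller so that it is not scheduled onto the Rancher server node.

# For a K8S deployment with a single master, pick one node and select the controlplane and etcd roles for it when adding the node.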
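Another way around the port conflict, different from the approach used in this post, is to publish Rancher server on other host ports so that 80 and 443 stay free for the ingress controller. The sketch below only prints the command. The port numbers and the function name are assumptions chosen for illustration; with this change the agents' `--server` value would become https://192.168.2.175:8443.

```shell
#!/usr/bin/env bash
HTTP_PORT=8080    # hypothetical host port for HTTP
HTTPS_PORT=8443   # hypothetical host port for HTTPS

# Print (do not execute) a Rancher server command that leaves 80/443 free.
rancher_server_command() {
  echo "docker run -d --restart=unless-stopped -p ${HTTP_PORT}:80 -p ${HTTPS_PORT}:443 rancher/rancher:latest"
}

rancher_server_command
```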
