关键词:

AdaBoost的一般算法流程

输入: 训练数据集 \(T = \left \(x_1,y_1), (x_2,y_2), \cdots (x_N,y_N)\right \\),\(y\in\left\-1,+1 \right\\),基学习器\(G_m(x)\),训练轮数M

- 初始化权值分布: \(w_i^(1) = \frac1N\:, \;\;\;\; i=1,2,3, \cdots N\)

- for m=1 to M:

(a) 使用带有权值分布的训练集学习得到基学习器\(G_m(x)\):

\[G_m(x) = \mathop\arg\min\limits_G(x)\sum\limits_i=1^Nw_i^(m)\mathbbI(y_i \neq G(x_i))\]

(b) 计算\(G_m(x)\)在训练集上的误差率:

? \[\epsilon_m = \frac\sum\limits_i=1^Nw_i^(m)\mathbbI(y_i \neq G_m(x_i))\sum\limits_i=1^Nw_i^(m)\]

(c) 计算\(G_m(x)\)的系数: \(\alpha_m = \frac12ln\frac1-\epsilon_m\epsilon_m\)

(d) 更新样本权重分布: \(w_i^(m+1) = \fracw_i^(m)e^-y_i\alpha_mG_m(x_i)Z^(m)\; ,\qquad i=1,2,3\cdots N\)

其中\(Z^(m)\)是规范化因子,\(Z^(m) = \sum\limits_i=1^Nw^(m)_ie^-y_i\alpha_mG_m(x_i)\),以确保所有的\(w_i^(m+1)\)构成一个分布。- 输出最终模型: \(G(x) = sign\left[\sum\limits_m=1^M\alpha_mG_m(x) \right]?\)

- 另外具体实现了real adaboost, early_stopping,weight_trimming和分步预测 (stage_predict,见完整代码)。

import numpy as np

from sklearn import datasets

from sklearn.tree import DecisionTreeClassifier

from sklearn.model_selection import train_test_split

from sklearn.base import clone

from sklearn.metrics import zero_one_loss

import time

class AdaBoost(object):

def __init__(self, M, clf, learning_rate=1.0, method="discrete", tol=None, weight_trimming=None):

self.M = M

self.clf = clf

self.learning_rate = learning_rate

self.method = method

self.tol = tol

self.weight_trimming = weight_trimming

def fit(self, X, y):

# tol为early_stopping的阈值,如果使用early_stopping,则从训练集中分出验证集

if self.tol is not None:

X, X_val, y, y_val = train_test_split(X, y, random_state=2)

former_loss = 1

count = 0

tol_init = self.tol

w = np.array([1 / len(X)] * len(X)) # 初始化权重为1/n

self.clf_total = []

self.alpha_total = []

for m in range(self.M):

classifier = clone(self.clf)

if self.method == "discrete":

if m >= 1 and self.weight_trimming is not None:

# weight_trimming的实现,先将权重排序,计算累积和,再去除权重过小的样本

sort_w = np.sort(w)[::-1]

cum_sum = np.cumsum(sort_w)

percent_w = sort_w[np.where(cum_sum >= self.weight_trimming)][0]

w_fit, X_fit, y_fit = w[w >= percent_w], X[w >= percent_w], y[w >= percent_w]

y_pred = classifier.fit(X_fit, y_fit, sample_weight=w_fit).predict(X)

else:

y_pred = classifier.fit(X, y, sample_weight=w).predict(X)

loss = np.zeros(len(X))

loss[y_pred != y] = 1

err = np.sum(w * loss) # 计算带权误差率

alpha = 0.5 * np.log((1 - err) / err) * self.learning_rate # 计算基学习器的系数alpha

w = (w * np.exp(-y * alpha * y_pred)) / np.sum(w * np.exp(-y * alpha * y_pred)) # 更新权重分布

self.alpha_total.append(alpha)

self.clf_total.append(classifier)

elif self.method == "real":

if m >= 1 and self.weight_trimming is not None:

sort_w = np.sort(w)[::-1]

cum_sum = np.cumsum(sort_w)

percent_w = sort_w[np.where(cum_sum >= self.weight_trimming)][0]

w_fit, X_fit, y_fit = w[w >= percent_w], X[w >= percent_w], y[w >= percent_w]

y_pred = classifier.fit(X_fit, y_fit, sample_weight=w_fit).predict_proba(X)[:, 1]

else:

y_pred = classifier.fit(X, y, sample_weight=w).predict_proba(X)[:, 1]

y_pred = np.clip(y_pred, 1e-15, 1 - 1e-15)

clf = 0.5 * np.log(y_pred / (1 - y_pred)) * self.learning_rate

w = (w * np.exp(-y * clf)) / np.sum(w * np.exp(-y * clf))

self.clf_total.append(classifier)

‘‘‘early stopping‘‘‘

if m % 10 == 0 and m > 300 and self.tol is not None:

if self.method == "discrete":

p = np.array([self.alpha_total[m] * self.clf_total[m].predict(X_val) for m in range(m)])

elif self.method == "real":

p = []

for m in range(m):

ppp = self.clf_total[m].predict_proba(X_val)[:, 1]

ppp = np.clip(ppp, 1e-15, 1 - 1e-15)

p.append(self.learning_rate * 0.5 * np.log(ppp / (1 - ppp)))

p = np.array(p)

stage_pred = np.sign(p.sum(axis=0))

later_loss = zero_one_loss(stage_pred, y_val)

if later_loss > (former_loss + self.tol):

count += 1

self.tol = self.tol / 2

else:

count = 0

self.tol = tol_init

if count == 2:

self.M = m - 20

print("early stopping in round , best round is , M = ".format(m, m - 20, self.M))

break

former_loss = later_loss

return self

def predict(self, X):

if self.method == "discrete":

pred = np.array([self.alpha_total[m] * self.clf_total[m].predict(X) for m in range(self.M)])

elif self.method == "real":

pred = []

for m in range(self.M):

p = self.clf_total[m].predict_proba(X)[:, 1]

p = np.clip(p, 1e-15, 1 - 1e-15)

pred.append(0.5 * np.log(p / (1 - p)))

return np.sign(np.sum(pred, axis=0))

if __name__ == "__main__":

#测试各模型的准确率和耗时

X, y = datasets.make_hastie_10_2(n_samples=20000, random_state=1) # data

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=1)

start_time = time.time()

model_discrete = AdaBoost(M=2000, clf=DecisionTreeClassifier(max_depth=1, random_state=1), learning_rate=1.0,

method="discrete", weight_trimming=None)

model_discrete.fit(X_train, y_train)

pred_discrete = model_discrete.predict(X_test)

acc = np.zeros(pred_discrete.shape)

acc[np.where(pred_discrete == y_test)] = 1

accuracy = np.sum(acc) / len(pred_discrete)

print(‘Discrete Adaboost accuracy: ‘, accuracy)

print(‘Discrete Adaboost time: ‘, ‘:.2f‘.format(time.time() - start_time),‘\n‘)

start_time = time.time()

model_real = AdaBoost(M=2000, clf=DecisionTreeClassifier(max_depth=1, random_state=1), learning_rate=1.0,

method="real", weight_trimming=None)

model_real.fit(X_train, y_train)

pred_real = model_real.predict(X_test)

acc = np.zeros(pred_real.shape)

acc[np.where(pred_real == y_test)] = 1

accuracy = np.sum(acc) / len(pred_real)

print(‘Real Adaboost accuracy: ‘, accuracy)

print("Real Adaboost time: ", ‘:.2f‘.format(time.time() - start_time),‘\n‘)

start_time = time.time()

model_discrete_weight = AdaBoost(M=2000, clf=DecisionTreeClassifier(max_depth=1, random_state=1), learning_rate=1.0,

method="discrete", weight_trimming=0.995)

model_discrete_weight.fit(X_train, y_train)

pred_discrete_weight = model_discrete_weight.predict(X_test)

acc = np.zeros(pred_discrete_weight.shape)

acc[np.where(pred_discrete_weight == y_test)] = 1

accuracy = np.sum(acc) / len(pred_discrete_weight)

print(‘Discrete Adaboost(weight_trimming 0.995) accuracy: ‘, accuracy)

print(‘Discrete Adaboost(weight_trimming 0.995) time: ‘, ‘:.2f‘.format(time.time() - start_time),‘\n‘)

start_time = time.time()

mdoel_real_weight = AdaBoost(M=2000, clf=DecisionTreeClassifier(max_depth=1, random_state=1), learning_rate=1.0,

method="real", weight_trimming=0.999)

mdoel_real_weight.fit(X_train, y_train)

pred_real_weight = mdoel_real_weight.predict(X_test)

acc = np.zeros(pred_real_weight.shape)

acc[np.where(pred_real_weight == y_test)] = 1

accuracy = np.sum(acc) / len(pred_real_weight)

print(‘Real Adaboost(weight_trimming 0.999) accuracy: ‘, accuracy)

print(‘Real Adaboost(weight_trimming 0.999) time: ‘, ‘:.2f‘.format(time.time() - start_time),‘\n‘)

start_time = time.time()

model_discrete = AdaBoost(M=2000, clf=DecisionTreeClassifier(max_depth=1, random_state=1), learning_rate=1.0,

method="discrete", weight_trimming=None, tol=0.0001)

model_discrete.fit(X_train, y_train)

pred_discrete = model_discrete.predict(X_test)

acc = np.zeros(pred_discrete.shape)

acc[np.where(pred_discrete == y_test)] = 1

accuracy = np.sum(acc) / len(pred_discrete)

print(‘Discrete Adaboost accuracy (early_stopping): ‘, accuracy)

print(‘Discrete Adaboost time (early_stopping): ‘, ‘:.2f‘.format(time.time() - start_time),‘\n‘)

start_time = time.time()

model_real = AdaBoost(M=2000, clf=DecisionTreeClassifier(max_depth=1, random_state=1), learning_rate=1.0,

method="real", weight_trimming=None, tol=0.0001)

model_real.fit(X_train, y_train)

pred_real = model_real.predict(X_test)

acc = np.zeros(pred_real.shape)

acc[np.where(pred_real == y_test)] = 1

accuracy = np.sum(acc) / len(pred_real)

print(‘Real Adaboost accuracy (early_stopping): ‘, accuracy)

print(‘Discrete Adaboost time (early_stopping): ‘, ‘:.2f‘.format(time.time() - start_time),‘\n‘)输出结果:

Discrete Adaboost accuracy: 0.954

Discrete Adaboost time: 43.47

Real Adaboost accuracy: 0.9758

Real Adaboost time: 41.15

Discrete Adaboost(weight_trimming 0.995) accuracy: 0.9528

Discrete Adaboost(weight_trimming 0.995) time: 39.58

Real Adaboost(weight_trimming 0.999) accuracy: 0.9768

Real Adaboost(weight_trimming 0.999) time: 25.39

early stopping in round 750, best round is 730, M = 730

Discrete Adaboost accuracy (early_stopping): 0.9268

Discrete Adaboost time (early_stopping): 14.60

early stopping in round 539, best round is 519, M = 519

Real Adaboost accuracy (early_stopping): 0.974

Discrete Adaboost time (early_stopping): 11.64 - 可以看到,weight_trimming对于Discrete AdaBoost的训练速度无太大提升,而对于Real AdaBoost则较明显,可能原因是Discrete AdaBoost每一轮的权重较分散,而Real AdaBoost的权重集中在少数的样本上。

- early_stopping分别发生在750和539轮,最后准确率也可以接受。

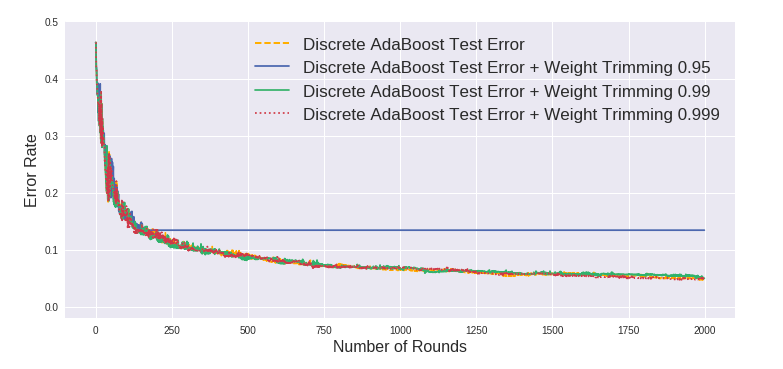

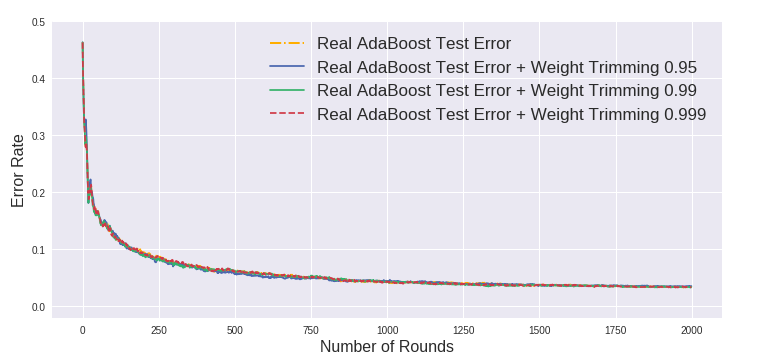

下两张图显示使用weight_trimming的情况下准确率与正常AdaBoost相差无几 (除了0.95的情况)。

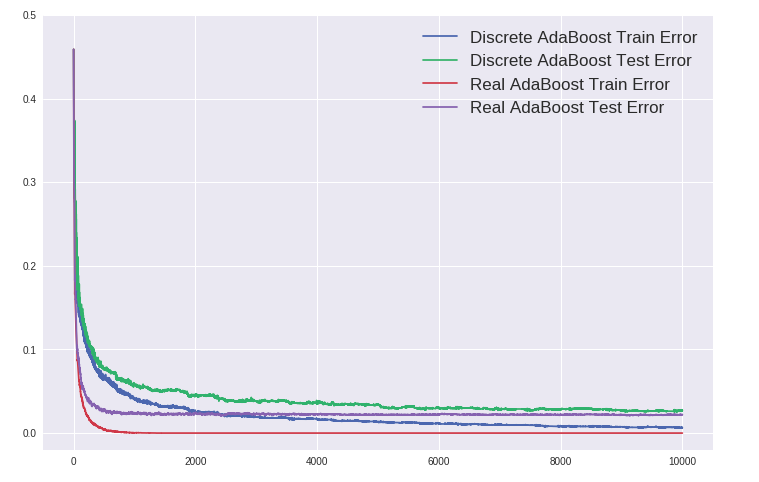

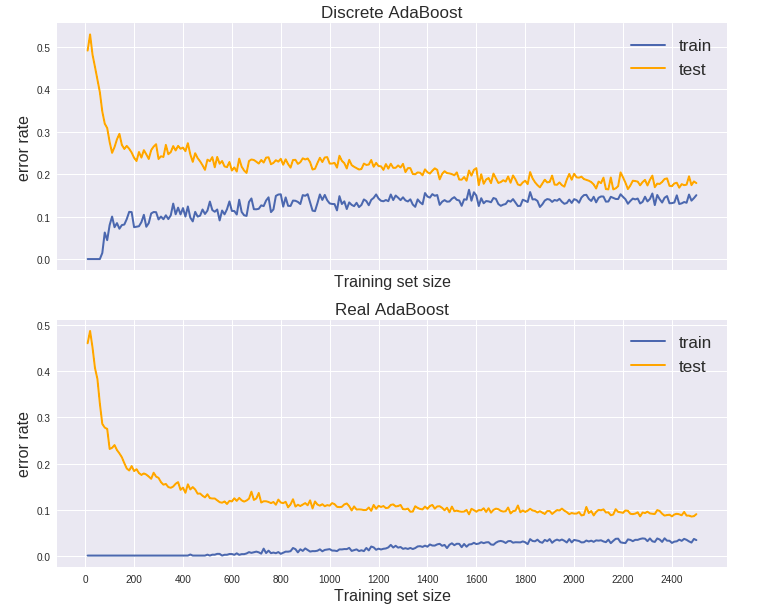

Discrete AdaBoost vs. Real AdaBoost - Overfitting

AdaBoost有一个吸引人的特性,那就是其“不会过拟合”,或者更准确的说法是在训练误差下降到零之后继续训练依然能提高泛化性能。如下图所示,训练10000棵树,Real AdaBoost的训练误差早早下降为零,而测试误差几乎平稳不变。而且可以看到 Real AdaBoost 对比 Discrete AdaBoost 无论是训练速度还是准确率都更胜一筹。

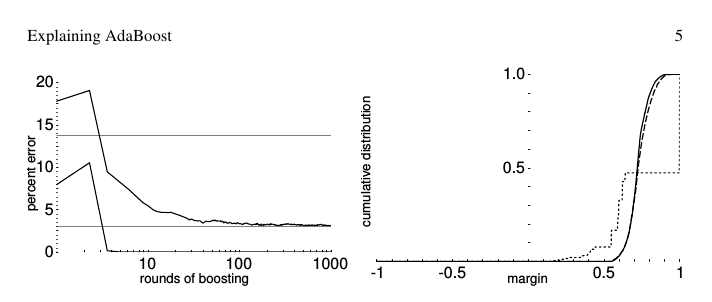

Margin理论可以解释这个现象,认为随着训练轮数的增加,即使训练误差已经至零,对于训练样本预测的margin依然会扩大,这等于会不断提升预测的信心。但过去十几年来学术界一直对该理论存在争议,具体可参阅AdaBoost发明者的论文 [Schapire, Explaining AdaBoost]。

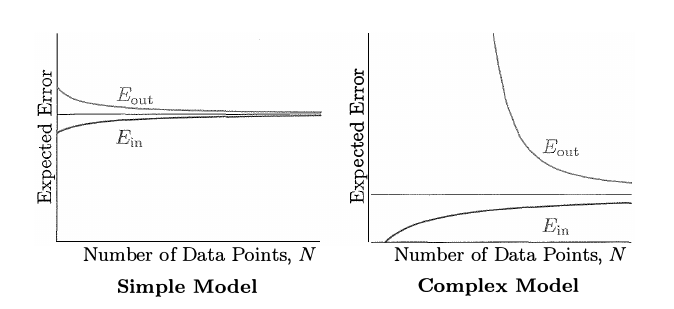

Learning Curve

Learning Curve是另一种评估模型的方法,反映随着训练集的增大,训练误差和测试误差的变化情况。通常如果两条曲线比较接近且误差都较大,为欠拟合;如果训练集误差率低,测试集误差率高,二者的曲线会存在较大距离,则为过拟合。

下面来看AdaBoost在上面数据集中的learning curve:

这里总共只选用了5000个数据 (2500训练集 + 2500测试集),因为learning curve的绘制通常需要拟合N个模型 (N为训练样本数),计算量太大。从上图来看Discrete AdaBoost是欠拟合,而Real AdaBoost比较像是过拟合,如果进一步增加数据,Real AdaBoost的测试误差率可能会进一步下降。

完整代码

/

集成学习之adaboost算法原理小结

...代表算法就是是boosting系列算法。在boosting系列算法中,Adaboost是最著名的算法之一。Adaboost既可以用作分类,也可以用作回归。本文就对Adaboost算法做一个总结。1.回顾boosting算 查看详情

机器学习之adaboost算法原理

...代表算法就是是boosting系列算法。在boosting系列算法中,Adaboost是最著名的算法之一。Adaboost 查看详情

集成学习之adaboost算法

1.回顾Boosting提升算法AdaBoost是典型的Boosting算法,属于Boosting家族的一员。在说AdaBoost之前,先说说Boosting提升算法。Boosting算法是将“弱学习算法“提升为“强学习算法”的过程,主要思想是“三个臭皮匠顶个诸葛... 查看详情

机器学习——集成学习之boosting

...blog.csdn.net/woaidapaopao/article/details/77806273?locationnum=9&fps=1AdaBoostGBDTXgboost1.AdaBoostBoosting的本质实际上是一个加法模型,通过改变训练样本权重学习多个分类器并进行一些线性组合。而Adaboost就是加法模型+指数损失函数+前项分布算... 查看详情

机器学习之adaboost

Adaboost是一种组合学习的提升算法,能将多个弱学习算法(甚至只比随机猜测好一点)组合起来,构成一个足够强大的学习模型。组合学习组合学习是将多个假说组合起来,并集成它们的预测。比如对于一个问题,我们可以生成2... 查看详情

机器学习之集成学习

1.概念梳理:AdaBoost:运行过程:训练数据中的每一个样本,并赋一个权重,这些权重值构成向量D,已开始这些权重值一样。第一次训练完,得到一个弱分类器,计算该分类器的错误率,然后调整每个样本的权重值,对同一个训... 查看详情

集成学习之梯度提升树(gbdt)算法

...,本文统一简称GBDT。GBDT也是Boosting算法的一种,但是和AdaBoost算法不同(AdaBoost算法上一篇文章已经介绍);区别如下:AdaBoost算法是利用前一轮的弱学习器的误差来更新样本权重值,然后一轮一轮的迭代;GBDT也是迭代,使用了... 查看详情

梯度提升树(gbdt)原理小结

转刘建平Pinard在集成学习之Adaboost算法原理小结中,我们对Boosting家族的Adaboost算法做了总结,本文就对Boosting家族中另一个重要的算法梯度提升树(GradientBoostingDecisonTree,以下简称GBDT)做一个总结。GBDT有很多简称,有GBT(GradientBoosti... 查看详情

adaboost原理和实现

上两篇说了决策树到集成学习的大概,这节我们通过adaboost来具体了解一下集成学习的简单做法。集成学习有bagging和boosting两种不同的思路,bagging的代表是随机森林,boosting比较基础的adaboost,高级一点有GBDT,在这里我也说下我... 查看详情

集成学习实战——boosting(gbdt,adaboost,xgboost)

集成学习实践部分也分成三块来讲解:sklearn官方文档:http://scikit-learn.org/stable/modules/ensemble.html#ensemble1、GBDT 2、XGBoost 3、Adaboost在sklearn中Adaboost库分成两个,分别是分类和回归AdaBoostClassifier和AdaBoostRegressor对于集成学习 查看详情

集成学习——boosting(gbdt,adaboost,xgboost)

...赖串行而成的算法,目前主流的主要有三个算法:GBDT,Adaboost,XGBoost这个链接可以看看:https://www.cnblogs.com/willnote/p/6801496.htmlboosting算法的原理如下: 1、GBDT 2、XGBoost 3、Ad 查看详情

机器学习集成学习(boosting)——adaboost提升算法(理论+图解+公式推导)

...待着您的光临~文章目录一、集成学习二、AdaBoost算法1.Boosting提升方法2.AdaBoost算法思想3.AdaBoost原理解释4.构造损失函数,求解参数5.前向分步算法2021人工智能领域新... 查看详情

机器学习算法:boosting集成原理和实现过程

...个弱学习器,整体能力就会得到提升代表算法:Adaboost,GBDT,XGBoost1.2实现过程:1.训练第一个学习器 2 查看详情

ml-6-2集成学习-boosting(adaboost和gbdt)

目录简述集成学习Boosting介绍AdaBoost算法GBDT算法总结一、简述集成学习上一篇博文已经介绍了:集成算法是由多个弱学习器组成的算法,根据个体学习器的生成方式不同,集成算法分成两类:个体学习器之间不存在强依赖关系,... 查看详情

一文详解机器学习中最好用的提升方法:boosting与adaboost(代码片段)

...f0c;它们都强大无比。而本文作者从最基础的Boosting概念到AdaBoost算法进行了详细的介绍,并展示了如何实现AdaBoost,这些都是走进集成方法大家族的敲门砖。最近,Boosting技术在Kaggle竞赛以及其它预测分析任务中大行其... 查看详情

课时boost与adaboost

...正则项的定义目标函数的计算目标函数继续化简子树划分Adaboost误差上限方差与偏差Bagging能够减少训练方差,对于不剪枝的决策树、神经网络等学习器有良好的集成效果Boosting减少偏差,能够基于泛化能力较弱的学习器构造强学... 查看详情

集成学习之随机森林案例专题python机器学习系列(十七)(代码片段)

集成学习之随机森林案例专题【Python机器学习系列(十七)】文章目录1.Bagging与随机森林简介2.随机森林--分类任务2.1准备数据2.2python实现随机森林--分类任务2.3绘制ROC曲线与计算AUC2.4绘制决策树3.随机森林--回归任务集成... 查看详情

机器学习之集成学习

集成学习(ensemblelearning)通过构建并结合多个学习期来完成学习任务,同质学习器和异质学习器。弱学习器:泛化性能略优于随机猜测的学习器集成学习通过过个学习器进行结合,可以获得比单一学习器显著优越的泛化性能集... 查看详情